Summary: As AI becomes more capable every day, you could get the impression that human intelligence isn’t so special after all. But guess what? Nothing could be further from the truth. Artificial intelligence is human intelligence, repackaged by Big Tech.

↓ Go deeper (12 min)

As the days grow darker and colder, and we inch towards the end of the year, it’s reflection time.

Throughout this past year, I’ve written about the hallucination problem, the metaphors and analogies used, and how artificial intelligence is humanity’s biggest vanity project. I talked about how the map is not the territory, the relationship between AI and religiosity, and what it means to give AI agents agency.

I’m deeply indebted to linguists and cognitive scientists like Emily M. Bender, Melanie Mitchell, Joanna Bryson, Gary Marcus, Walid Saba (may his soul rest in peace), philosophers like Dreyfus, Kahneman, Chomsky, and Wittgenstein, and AI researchers like Francois Chollet, Subbarao Kambhampati, and Amanda Askell, to name a few. I couldn’t have written any of these articles without building off their work.

The common thread for me has always been to get a better understanding of what artificial intelligence is and isn’t, in an attempt to come to terms with it.

It’s that last part, the coming to terms with, which I’d like to reflect on today.

“PhD-levels of intelligence”

Usually, coming to terms with something involves a combination of loss and acceptance. When we lose a loved one, for example, we’re confronted with the presence of their absence and we need to learn to live without them.

We can also experience loss collectively, as a group of people. As humankind.

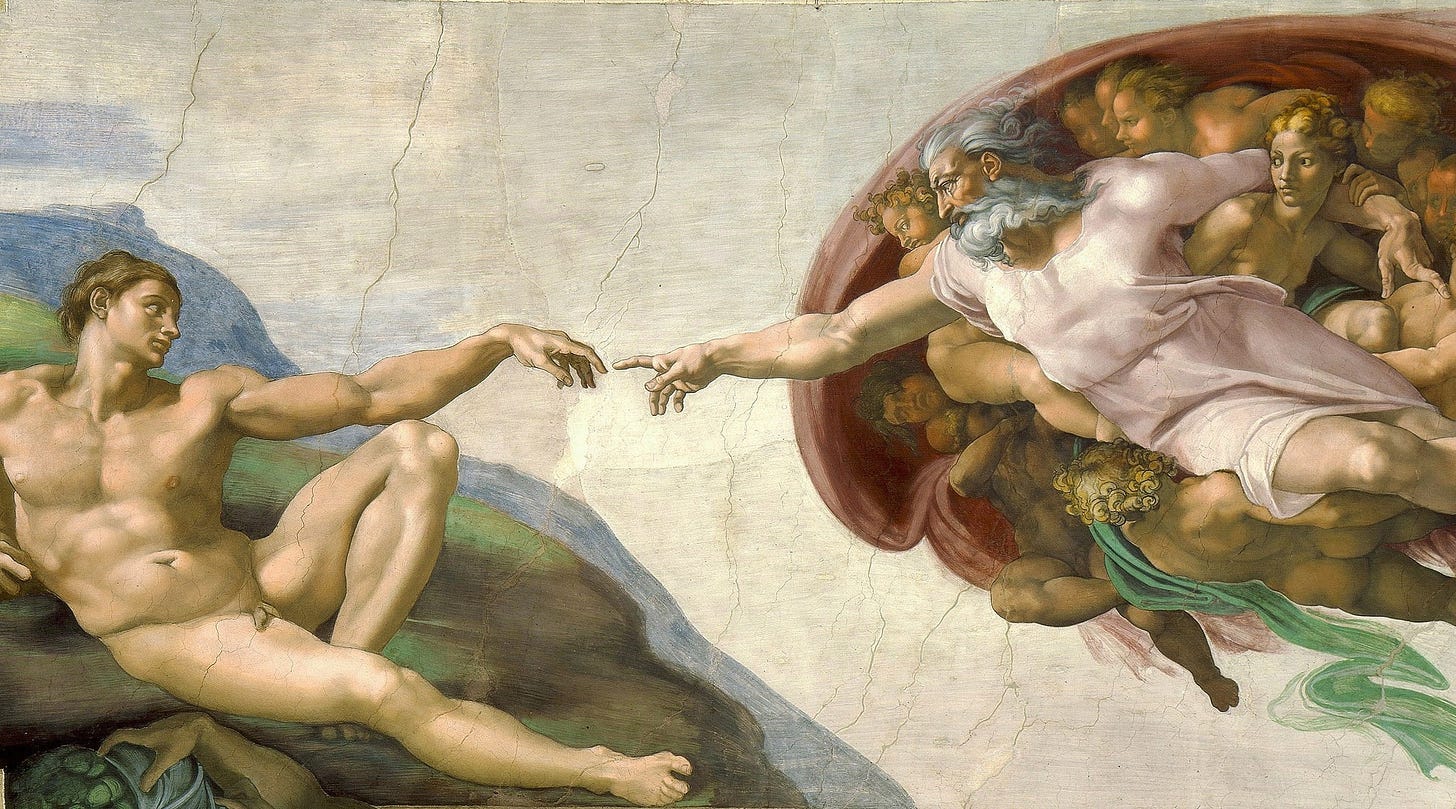

It was Copernicus and Galileo who taught us that we are not the center of everything and that Earth is but a planet among billions. Darwin destroyed the notion of human exceptionalism by revealing our evolutionary kinship with all life (as it turns out, we are nothing but monkeys on typewriters). And last but not least, Nietzsche stared deep into the abyss and saw that it was time for humanity to acknowledge the absence of any form of divine meaning.

To this day, humankind wrestles with these losses.

But progress hasn’t stopped. On the contrary, it has only accelerated. Modern-day technology has gone on to transform every part of society, and most recently, one technology in particular has forced us to rethink everything. Possibly the final blow to our collective sense of self-worth: artificial intelligence.

It’s easy to see how. AI’s ever-expanding capabilities call into question the uniqueness of human intelligence itself. If you believe the dominant narrative, echoed by the majority of tech leaders and AI researchers, you could get the impression that AI systems have now reached “PhD-levels of intelligence” and are well on track to surpass us.

You could get the impression that AI is already more knowledgeable, creative, and persuasive than we are. You could get the impression that humanity will soon be made obsolete.

Big Tech’s dirty little secret

Nothing could be further from the truth. And by buying into this story, we stand to lose a lot more, I might add.

Here’s what is really happening: a handful of companies have gobbled up all of human knowledge, literature, math, and science. Everything ever published on the Internet, every scientific paper, every book, and every digital work of art. Then they turned all of this data into a chatbot, just so they could pretend they created something intelligent and serve it back to us, neatly packaged in a subscription for a mere $200/month.

As if that’s not enough, there’s a staggering amount of human labor involved in turning a pre-trained model into something as helpful as ChatGPT or Claude (and I’m not talking about engineers here). Data annotation for AI is booming to the point that Uber is reportedly building a fleet of gig workers to label data for AI models.

In a recent paper, Emily M. Bender writes about this type of hidden labor, also known as “ghostwork”:

Human effort is everywhere in these systems: labeling data, design and evaluation, and as a backstop for when the computer fails on some input. Tech firms ship those tasks off to microworkers on crowdsourcing platforms, hiding often grueling labor (e.g., content moderation) and the humanity of the microworkers behind the illusion of ‘AI’.

Here’s how Andrej Karpathy pointedly summarized it on X:

Looking at it this way, it’s hard to view AI as some kind of magic intelligence-in-the-sky. It’s an amalgamation of all the text, visual, and auditory data available online, taken without permission (did I already mention that?) to train an algorithm that generates plausible-sounding but not always accurate responses on the fly.

In other words, artificial intelligence is human intelligence, remixed.

The smartest, most stupid technology ever created

Is it impressive? Absolutely. Yet I cannot stress enough that generative AI is the smartest, most stupid technology ever created.

When you use it on a regular basis, you’ll find that it is both extremely smart and extremely stupid at the same time. It will solve advanced math problems, but can’t tell the difference between a glass half-full and one filled to the brim.

Notice how ChatGPT is happy to gaslight me into believing the image it created satisfies the requirements, which is of course a product of one of its most fatal flaws: it doesn’t know what it knows.

The point here is not to ridicule, but to demystify. Because to understand how AI succeeds, we must look at how it fails. There’s a reason why LLMs suck at chess (often suggesting illegal moves) or even a game as simple as Tic Tac Toe, while being more than capable of writing a three-page essay about Friedrich Nietzsche in under 10 seconds. They’re good at what they have seen before.

This is even more true for coding and math problems, where a lot of high-quality data exists and the outcomes are terribly concrete: a piece of code either works or it doesn’t. This makes these domains well suited to neural networks and reinforcement learning, because it plays to their strength: learning from rewards. If someone else has already solved a version of the problem you’re trying to solve, chances are the LLM can do it too.

But the credit for solving these problems shouldn’t go to the AI. The credit should go to all the humans who came before, who didn’t have an AI to guide them. It’s generations and generations of humans doing deep thinking work that you now get to access via a chat interface on your iPhone!

So, the next time you use Dall-E to create a beautiful artwork, remind yourself that you are relying on countless people who have been honing their skills for years. And when you use Sora to create a video, know that you are relying on countless people shooting stunning visual footage, who spent considerable time and money on better equipment, cameras, and lighting. And when you ask ChatGPT to write you a story, realize that you are relying on countless people who have been telling and writing stories for centuries, millennia even, all of which have been used to train the AI.

Corporations may have captured everything that is human and put a big fat price sticker on it, as if they own it. But the ones you should really be thanking this Christmas are your fellow Earthlings, not Microsoft, Google, or OpenAI.

Peace, love and kindness,

— Jurgen


This is written incredibly well; I agree with all the remarks you made. As a user of artificial intelligence services such as ChatGPT and Dall-E, I sometimes do make remarks about how "intelligent" the service is. You make a fantastic point about humans being the reason ChatGPT, Dall-E, and other services are so smart. They had to be taught by humans, and by books, reports, stories, statistics, etc. that were made by humans. I have no disagreement with the fact that artificial intelligence is only as smart as humans, but I believe that is because we are still in the early stages of artificial intelligence development. What will happen when it is much more advanced? I am assuming that it will have to be capable of "thinking" on its own in order for it to actually be smarter than us. What are your thoughts?

I love the way you write about this, thank you for capturing these points with punch and care. I am going to dive into more of your posts now. What a treat, human-flavoured writing. It's my belief we will always need original writing from humans.