Metaphors And Analogies

The words we use to describe AI say everything about us.

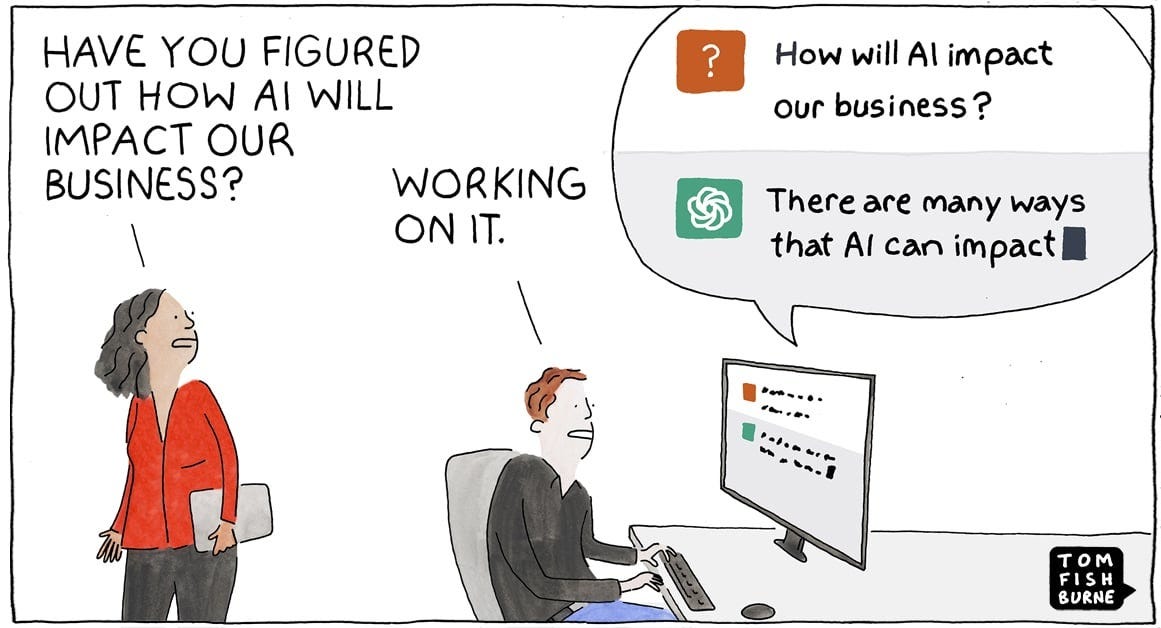

Summary: The field of AI leans heavily on anthropomorphizing language, for various reasons. These systems ‘think,’ ‘reason,’ and ‘hallucinate’. But what if these metaphors and analogies are making things more complicated? What if they obscure more than they reveal?

↓ Go deeper (10 min)

There’s a famous quote from a speech by the young, bearded Steve Jobs during a prestigious dinner:

“I think one of the things that really separates us from the high primates is that we’re tool builders. (…) And that’s what a computer is to me. What a computer is to me is it’s the most remarkable tool that we’ve ever come up with, and it’s the equivalent of a bicycle for our minds.”

A bicycle for the mind. I always liked that analogy. Everybody gets it.

When it comes to AI, plenty of metaphors are thrown around. Some good, some bad. A commonly heard one is that LLMs are ‘calculators for words’. It works well, because under the hood LLMs are essentially made of maths. At the same time, calculators are one hundred percent reliable, while LLMs… are not.

Generally speaking, metaphors and analogies make things easy to understand, but they can also be used to obscure our understanding. They can simplify or complicate. Reveal or hide.

The metaphor as dream or ideal

To my surprise, while I was working on this piece, Melanie Mitchell published an article in Science titled The metaphors of artificial intelligence. She writes:

“The disagreements in the AI world on how to think about LLMs are starkly revealed in this diverse array of metaphors. Given our limited understanding of the impressive feats and unpredictable errors of these systems, it has been argued that “metaphors are all we have for the moment to circle that black box.””

And:

The field of AI has always leaned heavily on metaphors. AI systems are called “agents” that have “knowledge” and “goals”; LLMs are “trained” by receiving “rewards”; “learn” in a “self-supervised” manner by “reading” vast amounts of human-generated text; and “reason” using a method called chain of “thought.” These, not to mention the most central terms of the field—neural networks, machine learning, and artificial intelligence—are analogies with human abilities and characteristics that remain quite different from their machine counterparts.

Mitchell rightly points out that most of the metaphors around AI skew towards anthropomorphization. And it has been like that since the inception of the field in the 1950s!

This is, of course, as I wrote last week, because artificial intelligence is humanity’s biggest vanity project. The words express a wish. They hint at the underlying dream or ideal of the makers (us, the folks building these systems): the hope that the metaphor will one day become reality.

On the surface, this looks innocent. It’s just words, right? Well, it’s more complicated than that.

AI assistants like Claude, ChatGPT, and Copilot (take a look at their Instagram page) are designed to act like they have a mind. They are made to have a sense of self (an identifiable persona), and their behavior gives the impression that they are capable of empathy and affection (which, of course, they are not).

It’s easy to see how someone who doesn’t know much about the inner workings of these systems could overestimate their capabilities, and trust them where trust should not be given.

More surprising is the fact that many AI researchers, who do know their inner workings, also choose to see AI as having a mind. They give these systems IQ tests, personality quizzes, and bar exams (which is basically standard practice across the industry) and don’t even think twice about it.

Last but not least, the big AI labs are happy to rely on this metaphor as part of their marketing and even legal strategy. Mitchell explains:

“A principal argument of the defendants is that AI training on copyrighted materials is “fair use,” an argument based on the idea that LLMs are like human minds. Microsoft CEO Satya Nadella, pushing back on such lawsuits, put it this way: “If I read a set of textbooks and I create new knowledge, is that fair use? (…) If everything is just copyright then I shouldn’t be reading textbooks and learning because that would be copyright infringement.””

Now, I don’t believe they believe that, but it’s clever rhetoric. And it might even work.

The chore we’re trying to solve

I don’t know about you, but I’ve always wondered what words Steve Jobs would’ve used to describe the technology we have today. If the computer is a bicycle for the mind, what does that make AI… an e-bike? After all, the promise of AI was that it was going to make us smarter. Faster, more productive.

But is it? What if I told you it’s well on its way to becoming a crutch for the mind?

What if I told you that AI slop is on the rise, coding tools are decreasing code quality, and educators using dodgy AI detection software are falsely accusing students of cheating? That hospitals are adopting error-prone AI transcription tools, AI explanations in radiology may lead to over-reliance, and researchers are misusing ChatGPT for scientific research?

I honestly think CNET’s Bridget Carey said it best, in a recent video covering the new features of Apple Intelligence:

“What’s the chore we’re trying to solve for? Is communication the chore? Is reading comprehension and critical thinking the chore? (…) What is the value of having the computer doing the thinking for us?”

What I think we need is to see AI for what it really is. It is artificial intelligence. Artificial intelligence.

Not a solution to a problem, but a tool in our toolbox. And with that in mind, we need to build products, applications, and features that actually make us feel brighter, more curious, and more creative. Instead of being dopamine-draining and amplifying the worst in us, AI should help us become better versions of ourselves.

What we need are new metaphors.

Kill them with kindness,

— Jurgen

Not done reading?

Friend of the newsletter, journalist , recently interviewed Melanie Mitchell for Dutch newspaper NRC. Here’s a link to his newsletter De hype is real and the interview.


Melanie Mitchell influenced me and my pursuit of a Master's degree in genetic algorithms. I recommend her work.

I liked the "calculator for text" analogy. I'm not sure I still do, but not for the reason you give, Jurgen.

An LLM is fully reliable as a piece of technology: it never fails to execute the expected calculations to produce the next token. I'd say calling it unreliable is another example of anthropomorphism. "Failure" means something else in the context of a human-like system (which an LLM is not).

When we call LLM hallucinations a failure, we assume LLMs were built for truthfulness, which they were not and cannot be. My preferred explicit position is that an LLM produces true things by accident, and its confabulations are just as much an accident. The same calculations get performed at the same scale in exactly the same way: the system functions perfectly, without fail.

An LLM run with no randomness (temperature 0) is also perfectly predictable in its responses, so even the "reroll" mystique is taken out of the equation.
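To make the temperature point concrete, here is a toy sketch of how temperature is commonly applied when picking the next token. It is not any real model's decoding code: the four-word vocabulary, the logit values, and the function names `softmax` and `next_token` are hypothetical, chosen only for illustration. In this idealized setting, temperature 0 collapses to an argmax (greedy decoding), so every run returns the same token, while a positive temperature samples from a softmax distribution and the pick can vary between runs.

```python
import math
import random

# Hypothetical vocabulary and logits (raw scores) for the next token.
# These numbers are made up for illustration; they do not come from a real model.
VOCAB = ["cat", "dog", "bird", "fish"]
LOGITS = [2.0, 1.5, 0.3, -1.0]


def softmax(logits, temperature):
    """Convert logits into probabilities, scaled by temperature."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(logits, temperature, rng):
    """Pick the index of the next token.

    temperature == 0 is treated as greedy decoding: always take the
    highest-scoring token, so no randomness is involved.
    """
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random()
    greedy = {VOCAB[next_token(LOGITS, 0, rng)] for _ in range(100)}
    print("temperature 0:", greedy)  # always {'cat'}
    sampled = {VOCAB[next_token(LOGITS, 1.0, rng)] for _ in range(100)}
    print("temperature 1.0:", sampled)  # usually several different words
```

The greedy branch never touches the random number generator, which is the sense in which a temperature-0 run has nothing left to "reroll".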

It is a reliable calculator that does not solve the problems we try to use it for.