Summary: A new AI device went viral last week: Friend’s $99 necklace. Friend’s pitch is that it’s a wearable designed to combat loneliness, but the internet wasn’t having it. Silicon Valley seems increasingly out of touch with the people it says it is building for, and cracks are beginning to show.

↓ Go deeper (9 min)

Black Mirror is calling; they want their episode back. That’s what I thought when I saw this video for a new AI product called ‘Friend’. The internet agreed with me. The video garnered 100K views in the first 24 hours, and the response was visceral. The top comment on YouTube reads: “God this world sucks.”

Not only does the promo seem to miss the mark completely, but the device itself isn’t even available yet. You can pre-order it on the company’s website for $99, but the first units won’t ship until Q1 2025.

We’re reminded of the go-to-market strategy of the Rabbit R1, which secured over 50,000 pre-orders on the basis of a highly misleading demo. Misleading, because when the device finally shipped, it had considerably fewer features than advertised, and the features that were available didn’t work.

Not long after that, Humane’s AI Pin launched, crashed, and burned. That company raised $230 million, hired 200 people across San Francisco, New York, and other cities, and spent more than five years developing a device that turned out to be dead on arrival.

Frankly, Friend exhibits many of the same symptoms. Despite not having a product ready to ship, Avi Schiffmann, Friend’s CEO and a 21-year-old Harvard dropout (classic!), raised $2.5 million from a handful of investors at a $50 million valuation, and went on to spend $1.8 million of it on the domain name www.friend.com. If you think that’s ludicrous, Schiffmann himself called it a “no-brainer”.

No moat

Yet Friend’s biggest red flag is that it has no moat. For those who don’t know, a moat is essentially a differentiating factor: something that sets a company apart, be it technology, data, or a strong brand, and makes it really hard for others to copy what you’re doing. For Humane, it was supposed to be the hardware. Rabbit claimed to have pioneered a new type of AI model called LAM, a large action model, which, if it is even a real thing, hasn’t proven very effective.

From the looks of it, the Friend device is trivial to make: it’s a Babybel-sized pendant with a microphone that connects to an app on your phone via Bluetooth. It is so trivial to make, in fact, that it already exists.

Then the AI must be what makes it special, right? Nope. Not only could you ask ChatGPT to roleplay, but there is also a whole range of existing platforms designed specifically for creating your own AI or chatting with personalities created by others, the best known being Character.AI and Replika.

The only difference is that you now have this thing around your neck to prompt your AI friend, which, I almost forgot to mention, will be listening in on everything you do, all the time. Yeah, you read that right. It has to, in order to be your friend on demand.

Deeply unserious

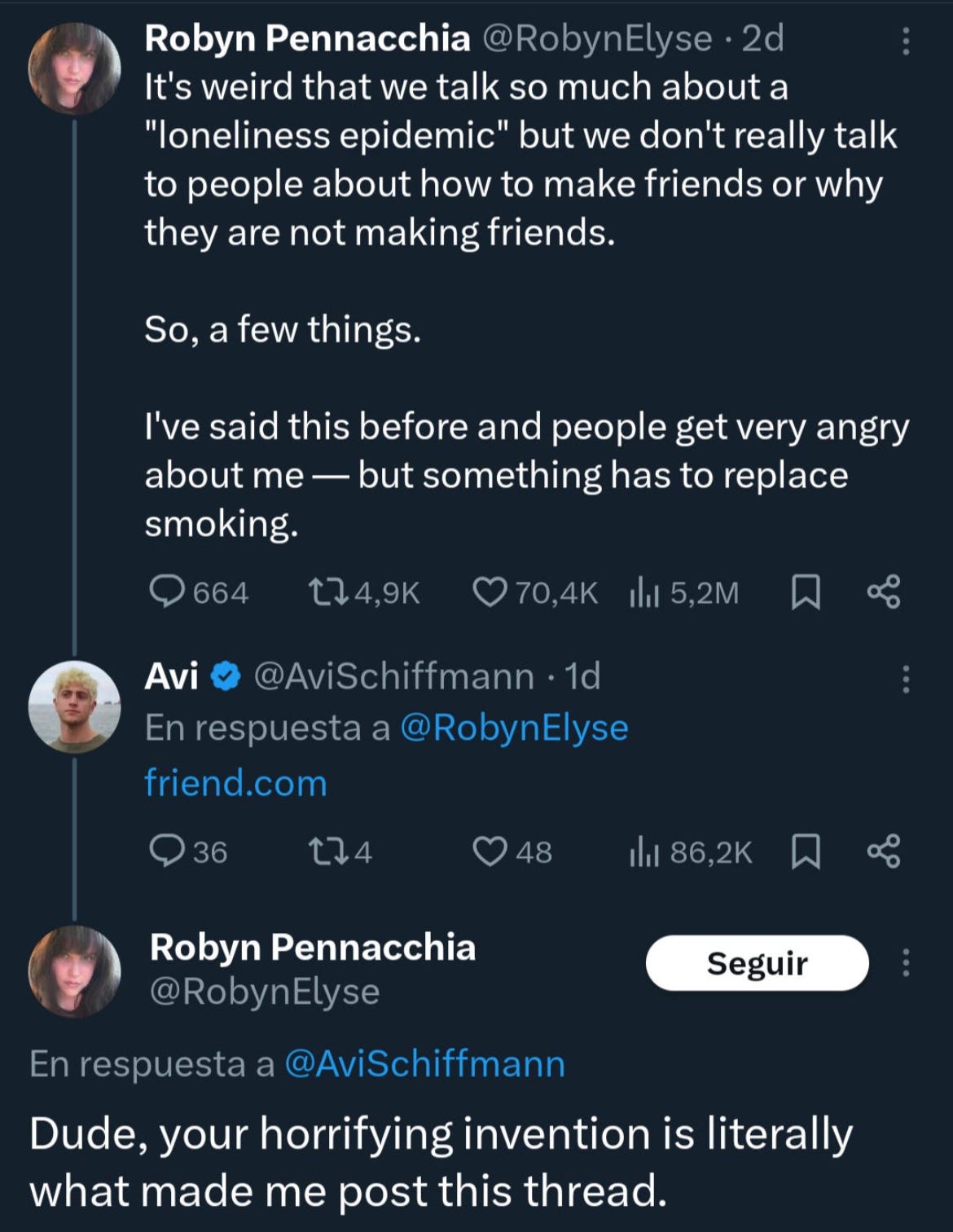

Of course, there’s a whole privacy rabbit hole we could go down, or I could write a paragraph or two about how Schiffmann pitches this project as a solution to the loneliness epidemic. Either way, people are not having it.

If you ask me, it all adds up to a single conclusion: These are not serious people and these are not serious companies. They are not serious about building products that are genuinely useful to people. So, let’s stop pretending this is going to solve any real problems people face when longing for human connection.

For the record, that doesn’t mean Friend won’t sell any pendants. I actually expect the company to sell quite a few, especially once it starts marketing them to kids and young adults. It’s not entirely unimaginable that Gen Z will fall in love with the idea of having an AI assistant swinging around their neck or clipped to their pants as a keychain, wearing it not as a badge of loneliness but proudly, as a new form of social status.

But even if all of that turns out to be true, there’s no reason to think this would amount to a sustainable business. Evidence suggests that AI companions aren’t particularly profitable. Inflection, the company behind the personal companion Pi, was recently abandoned by its CEO, Mustafa Suleyman, and most of its staff, less than a year after raising $1.3 billion. Investors were compensated, and a shell of the company was left behind. And Character.AI, started two years ago by an ex-Google employee, was acqui-hired in similar fashion last week, when CEO Noam Shazeer announced that he and many of its talented employees were leaving to join the research division of Google DeepMind. All of Character.AI’s proprietary technology is being licensed to Google as well.

It signals that neither company had anything that even looked like a road to profitability. Both proved to be short-lived adventures, and while AI companions are here to stay, the million-dollar question remains: once the novelty wears off, who’s willing to pay?

All articles are free to read, always…

Teaching computers how to talk is a donation-based publication. There are no paywalls and no subscriber-only comment section. If you value my work and you’re a regular reader, consider showing your support.


I don't know what you're talking about, man.

I am currently wearing my Friend pendant (it's a pre-alpha prototype, don't worry about it) right next to my Humane AI Pin so they can talk to each other while I record their conversation using my Rabbit R1 and send the resulting video to Gemini to make a song about, which I'm hoping ChatGPT-4o will sing for me once I get access to the new Advanced Voice mode.

Life is good!

- this comment was proofread by Claude 3.5 Sonnet.

Great write up. The dystopian vibes are strong with this, and I’m sure the founder is fully aware, and just looking for a quick paycheck while the hype is still here.

Also, it's not even an assistant as far as I can tell. Just something that will randomly message you? Its utility is basically zero, and it’s a sorry reflection of where we are as a society if this is deemed useful/helpful/the future.

I wrote about this also — https://www.trend-mill.com/p/ai-is-not-your-friend