Summary: Hallucinations are a persistent problem in the field of artificial intelligence. SimpleQA, an open-source benchmark released by OpenAI, helps probing AI models for factuality. The results confirm a longstanding hypothesis: LLMs don’t know what they don’t know.

↓ Go deeper (9 min)

Sometimes the most illuminating research gets the least attention. Last week, OpenAI published SimpleQA, a benchmark designed to assess factuality in large language models. Not a single soul wrote about it.

In the blog post on the OpenAI website, we can read:

“An open problem in artificial intelligence is how to train models that produce responses that are factually correct. Current language models sometimes produce false outputs or answers unsubstantiated by evidence, a problem known as “hallucinations”. Language models that generate more accurate responses with fewer hallucinations are more trustworthy and can be used in a broader range of applications.”

That’s very different language than what is used by Altman and others, who in the past have characterized hallucinations as “a feature, not a bug”. This has been bothering me a great deal, because everyone who’s trying to build with LLMs knows this not to be the case, struggling to deal with these models’ disregard for the truth.

If you ask me, every LLM provider should be forced to display the reliability of their models, just like the sugar content on the back of a bag of candy. Turns out, now we can.

LLMs don’t know what they don’t know

The goal of SimpleQA is to measure a scientific phenomenon known as ‘calibration’, or whether language models know what they don’t know.

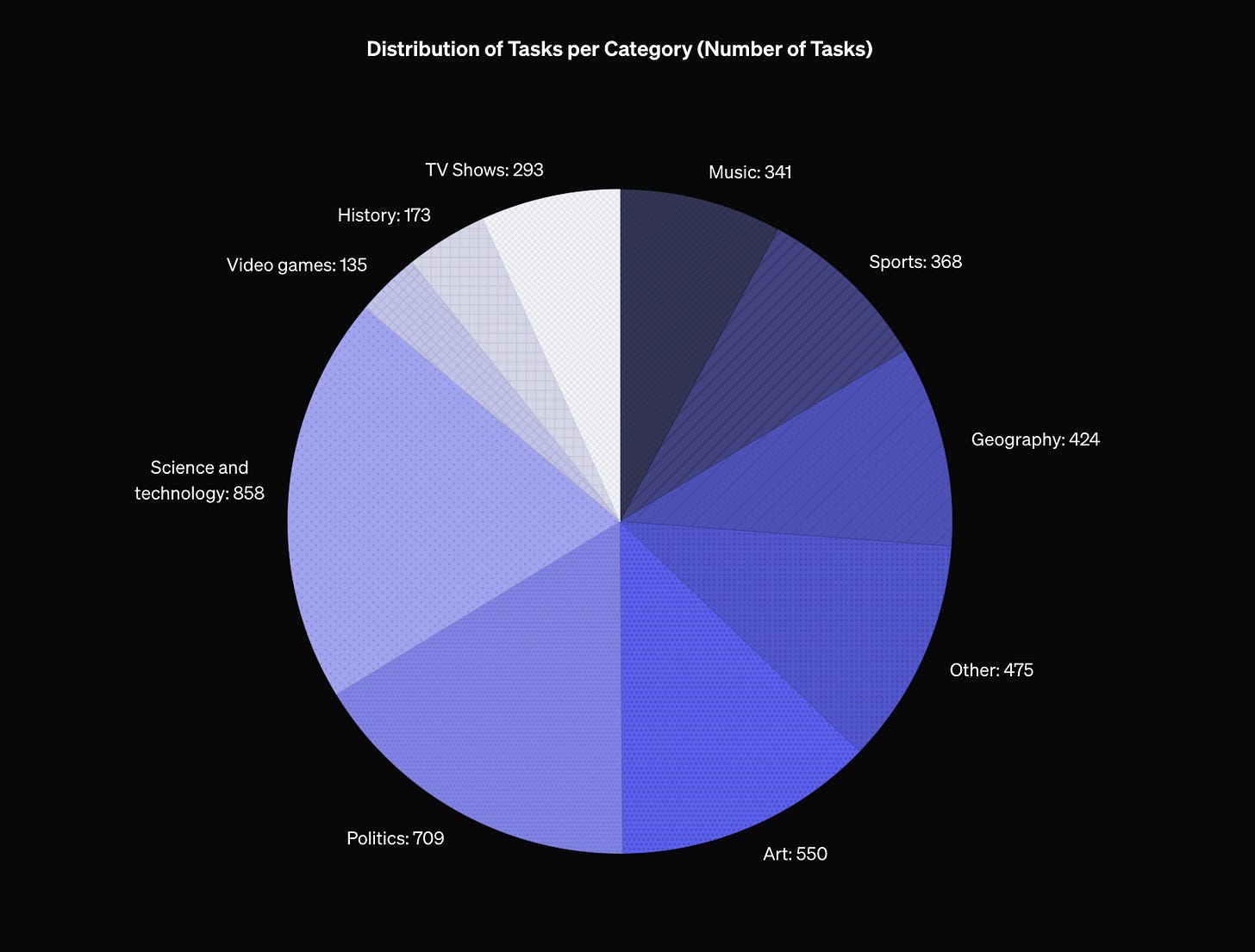

The benchmark is made up of 4,326 short, fact-seeking questions, collected by AI trainers. The questions were designed to have a single, indisputable answer and cover a wide range of topics.

When probing the model, one of three things can happen. The model gives a correct answer; the model confidently gives the wrong answer, or; the model refuses to answer the question.

Ideally, a model answers as many questions correctly as possible, while minimizing the number of incorrect answers. Now, let’s take a look at how OpenAI’s models score on their own benchmark.

In a staggering 60.8% of all cases, GPT4o confidently gives the wrong answer, and in only 2% of all cases does it not attempt to answer the question.

Their newly released reasoning model, o1, only performs marginally better. It hallucinates answers in 48.0% of the cases, mainly due to a larger percentage of refusals.

Hallucinations are the result of overconfidence

has often said that LLMs are “frequently wrong, never in doubt”. The shocking part is not that these models get it wrong 2 out of 3 times — it’s the fact these models cannot catch themselves doing it. This is precisely what makes hallucinations so pervasive!

There’s also ample evidence this is true for all frontier models. When testing Claude-3.5 Sonnet, similar results can be observed. According to the researchers, it answered fewer questions correctly than GPT-4o, but also refusing to answer more.

In an ideal world, LLMs could tell us when they’re unsure about an answer. Instead, these models consistently overstate their confidence.

It’s worth noting that little to no progress has been made on this front, despite models getting substantially bigger. The working theory is that hallucinations are inherent to the technology. Because LLMs are autoregressive, facts or knowledge stored in the model can only be accessed from a certain direction. This is best illustrated through the Reversal Curse, which showed that models that learn “A is B” don’t automatically generalize “B is A”. Another way of putting it: the input dictates the output.

This is why you cannot trust LLMs for things you’re not an expert on. And it’s also the reason why prompt engineering and mitigation strategies like retrieval augmented generation exist, and why companies like Google, OpenAI, and Perplexity are trying to blend LLMs with search.

In many ways, it feels like papering over the cracks. The underlying problem persists.

Kill them with kindness,

— Jurgen

Not done reading?

Here are some of my recent articles that you may have missed:

"This is best illustrated through the Reversal Curse, which showed that models that learn “A is B” don’t automatically generalize “B is A”. Another way of putting it: the input dictates the output"

Human intuition can often be like this, the similarities are quite striking.

"Well, which of these statements strikes you as more natural: "98 is approximately 100", or "100 is approximately 98"? If you're like most people, the first statement seems to make more sense. (Sadock 1977.) For similar reasons, people asked to rate how similar Mexico is to the United States, gave consistently higher ratings than people asked to rate how similar the United States is to Mexico. (Tversky and Gati 1978.)"

source: https://www.lesswrong.com/posts/4mEsPHqcbRWxnaE5b/typicality-and-asymmetrical-similarity

The term "hallucination" is also heavily loaded and another example of the prevalent problem of wishful mnemonics in AI. I think hallucinations are just the result of sampling OOD in a smooth approximation of language. For a language model to generalize (to generate sentences it has never seen in training) it has to come up with stuff. And stochastic language models come up wth stuff by building very similar sentences (low perplexity) to sentences in the training set. We can always take a truthful sentence in the training set and make a tiny tweak that makes it false. As long as you model language as Markov process conditioned on language itself, with no grounding in a world model, hallucinations are a feature, not even a bug.