When Models Go MAD

AI slop is flooding the internet and it's bad news for everyone.

Summary: As AI becomes dirt-cheap, more low-quality content will be dumped onto the internet. It fills our social media feeds, online forums, and even Wikipedia. Not only is ‘AI slop’ destined to pollute our online spaces, but according to researchers, it might even drive future AI models mad.

↓ Go deeper (10 min)

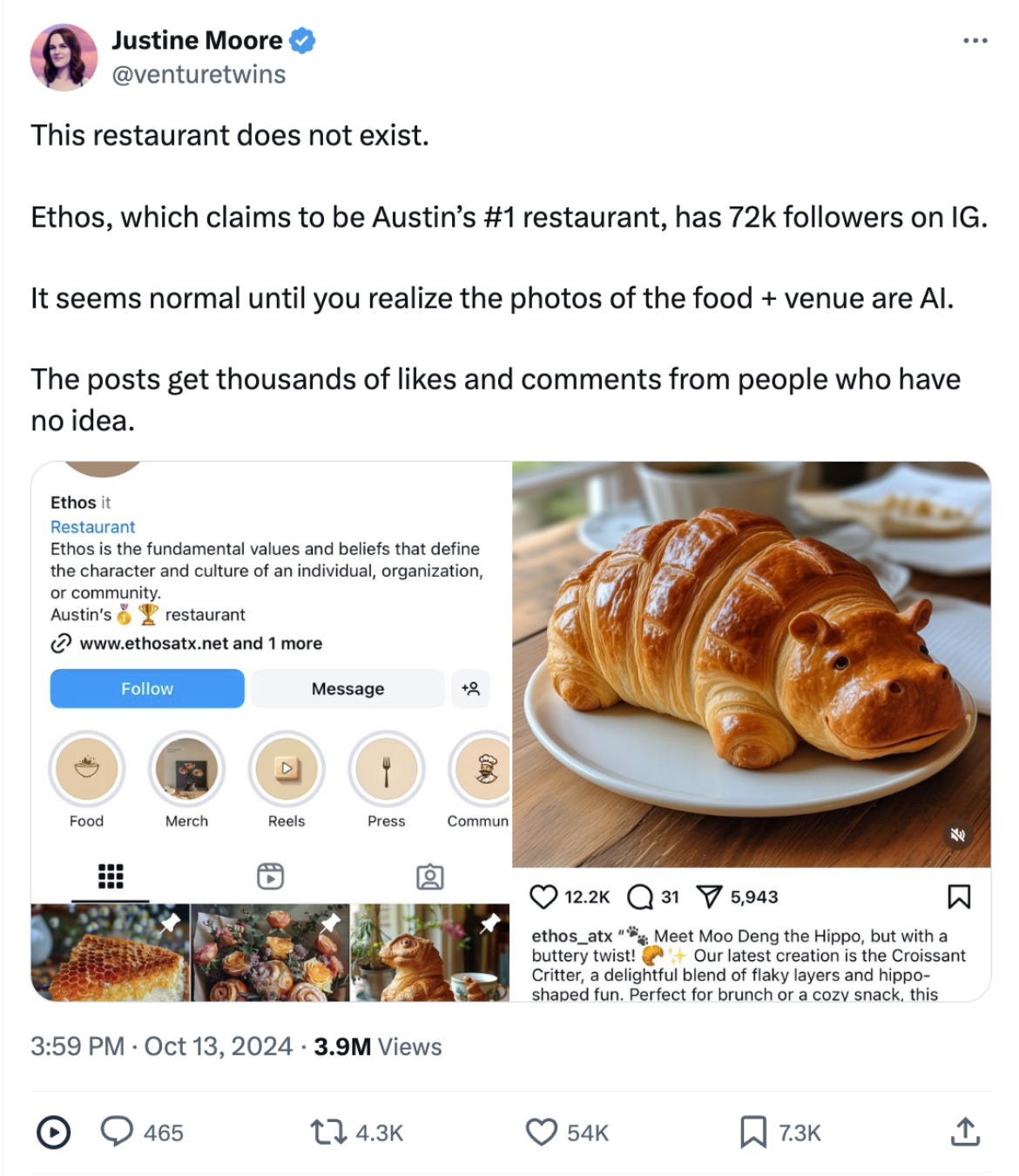

Have you heard of ‘AI slop’? It’s what spam was in the ’90s. An early champion of the term was developer , before it became mainstream. It describes the wave of low-quality AI-generated content that is currently flooding the web.

Don’t believe me? A recent preprint found that at least 5% of new Wikipedia articles in August 2024 were AI-generated; Facebook isn’t doing anything to stop AI-generated images of Hurricane Helene; and Goodreads and Amazon are grappling with various AI-generated book schemes that scam people into buying pulp. And that’s only the tip of the iceberg.

This is not without consequence. As a writer and thinker, I cannot help but ask myself: what will the internet look like in 5 to 10 years? What will this amount to, if the problem is most likely only going to get worse?

The Great Blandness

Some of you may already see the writing on the wall, as do I. While AI insiders break their brains over existential threats from superintelligence, the immediate danger is much more mundane: AI-generated content is making the world more bland.

It’s obvious to any frequent user of AI. Assistants like ChatGPT and Gemini give off telltale signs, certain words that they keep coming back to: “By meticulously delving into the intricate web of ideas…” That’s because generative AI models (not just language models, but image generation tools too) are geared towards homogeneity.

And as we race towards a world where intelligence is “too cheap to meter”, the cost of producing AI slop falls to zero.

The slop will pile up as every marketing department on Earth adopts writing tools to “increase productivity”, as AI-generated content racks up millions of views and likes on platforms like X, Facebook, and Instagram, and as forums like Reddit, Stack Overflow, and Quora are polluted with generic answers from bots. If we’re not careful, even Wikipedia, arguably the last bastion of collective human knowledge, could be swallowed whole.

Feeding AI its own work

This is not just bad for us; it could spell disaster for AI companies, too. If AI is trained on information scraped off the internet, and then people use it (willingly or unwillingly) to produce loads of AI slop, this content becomes part of the internet and eventually part of future AI models that are trained on information scraped off the internet…

Feeding AI models their own work could make them go MAD, according to researchers at Rice and Stanford:

“As synthetic data from generative models proliferates on the Internet and in standard training datasets, future models will likely be trained on some mixture of real and synthetic data, forming an autophagous (i.e. self-consuming) loop.

(…)

Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease.”

MAD is short for ‘model autophagy disorder’, a direct reference to mad cow disease, which was caused by cows eating feed containing bovine brain matter.

You might be surprised, but training models on synthetic data is very trendy right now. Done well, it can actually enhance performance. However, the aforementioned research suggests that at a certain point, model makers will see diminishing returns and eventually suffer model degradation.
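To get a feel for why a self-consuming loop loses diversity, here is a toy sketch in Python. It is not the researchers' experiment: the "model" is just a Gaussian fitted to its training data, the "real" data is assumed to come from a standard normal distribution, and the names `simulate_loop` and `fresh_fraction` are made up for illustration. Each generation is trained on samples drawn from the previous generation's model, optionally topped up with fresh real data.

```python
import random
import statistics


def simulate_loop(generations, sample_size, fresh_fraction=0.0, seed=0):
    """Return the fitted standard deviation of each generation.

    Toy stand-in for a self-consuming training loop (an assumption for
    illustration): the "model" is a Gaussian fitted to its training set,
    and the "real" data is drawn from N(0, 1).
    """
    rng = random.Random(seed)
    n_fresh = round(sample_size * fresh_fraction)
    n_synthetic = sample_size - n_fresh

    # The first generation is trained on real data only.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    history = []
    for _ in range(generations):
        # "Train": maximum-likelihood fit of a Gaussian to the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
        # Next training set: the model's own samples plus any fresh real data.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synthetic)]
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
    return history


if __name__ == "__main__":
    closed = simulate_loop(generations=500, sample_size=20)
    mixed = simulate_loop(generations=500, sample_size=20, fresh_fraction=0.5)
    print(f"synthetic only:  std {closed[0]:.3f} -> {closed[-1]:.3g}")
    print(f"half fresh data: std {mixed[0]:.3f} -> {mixed[-1]:.3g}")
```

In this sketch the synthetic-only loop tends to see its spread (a crude proxy for diversity) shrink toward zero over the generations, while mixing in fresh real data keeps it in the neighborhood of the original. That is a toy version of the point about needing enough fresh real data in each generation, not a reproduction of the paper's results.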

It will happen slowly but steadily: a process that will incontrovertibly and irreversibly dilute truth and authenticity online. The hallucinations, fake citations, biases, and empty catchphrases will be reinforced further with every cycle, like a snake eating its own tail.

The enshittification of the internet

To turn the tide, insofar as that is possible, we’re on our own, I’m afraid. We’ll have to envision a different internet than the one we’re headed for.

We can’t rely on Big Tech, because Big Tech is all-in on generative AI. Mark Zuckerberg is eagerly looking forward to a future where “we’re going to show you content that’s generated by an AI system that might be something that you’re interested in.” And so are Microsoft, Google, and OpenAI. They want you to use AI as much as you can, in order to recoup their multibillion-dollar investments in AI CapEx.

To quote a fellow Substack writer, , who writes even more unapologetically than I do:

“I hope the general public realizes it’s absolutely fucking insane to let an AI think for you, to make decisions for you, to have any agency over your life at all. I hope we push back against feeds of AI content that’s been generated to try and suck us deeper into our device addiction under the guise of it being ‘what we want.’”

Let us not slide into madness.

Stay tuned for more,

— Jurgen

So right! I have created a new Reddit sub r/croissanthippo to document the rise of AI crap slopped by the gullible.

My 2 cents:

If AI training relies on AI-generated content, the phrase 'Garbage in, Garbage out!' comes to mind. By prioritizing quantity over quality, we may be undermining human creativity and the ability of artists and writers to make a living. This could lead to a future where we are left with only legacy art and literature, created by humans who are no longer alive or no longer producing new work.

Moreover, our ability to create art and write is fundamental to human identity. By outsourcing human creativity to machines, we are undermining our unique ability and risking the homogenization of artistic expression.

Furthermore, relying on AI for decision-making and problem-solving could be even worse, as it could have long-term consequences for human agency and critical thinking. As we increasingly rely on machines to make decisions, we may lose the ability to think for ourselves and make informed choices.