System 2 Thinking

Learning to reason with LLMs.

Summary: AI labs are eager to replicate System 2 thinking in AI, hoping it will alleviate some of the inherent flaws of LLMs. Doing so could unlock better planning and reasoning capabilities. OpenAI’s latest model release, o1, is touted as an early attempt at that.

↓ Go deeper (10 min)

LLMs are often likened to System 1 thinking in humans. This comparison stems from their ability to generate rapid-fire responses, based on knowledge and patterns that are instilled into the model during training.

The concept of System 1 and System 2 thinking was popularized by the late Nobel Prize-winning Israeli-American psychologist Daniel Kahneman in his best-selling book Thinking, Fast and Slow (2011). According to Kahneman, System 1 thinking in humans is intuitive and automatic. It allows people to make snap decisions based on previous experiences (this is where a lot of our cognitive biases come into play). In contrast, System 2 is slow and contemplative. We use this kind of thinking for things that require more careful consideration.

Big AI labs are now exploring ways to emulate System 2 thinking with AI. They see it as a way to move past the pitfalls of today’s LLMs, which are prone to hallucinations and lack robust reasoning and planning capabilities. Hence, OpenAI’s latest model release:

“We are introducing OpenAI o1, a new large language model trained with reinforcement learning to perform complex reasoning. o1 thinks before it answers — it can produce a long internal chain of thought before responding to the user.”

Chains of thought

Most people know that when you ask a large language model a question, it often helps to ask it to ‘reason’ step by step before answering. This is known as chain-of-thought prompting.
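For readers who haven’t tried it, here is a minimal Python sketch of what chain-of-thought prompting looks like from the user’s side. The helper name `build_prompt` is hypothetical, no model is actually called, and the appended cue is the widely used “Let’s think step by step” phrasing rather than anything specific to o1. The example question is the bat-and-ball puzzle discussed in Thinking, Fast and Slow, where the fast System 1 answer ($0.10) is wrong and the correct answer is $0.05.

```python
# Hypothetical helper: builds a plain prompt and a chain-of-thought variant.
# No LLM is called here; the resulting strings would be sent to whatever model API you use.

COT_SUFFIX = "Let's think step by step."


def build_prompt(question: str, chain_of_thought: bool = False) -> str:
    """Return a prompt for `question`, optionally nudging the model to reason first."""
    prompt = f"Q: {question}\nA:"
    if chain_of_thought:
        # Zero-shot chain of thought: append a cue that invites intermediate reasoning steps
        prompt += " " + COT_SUFFIX
    return prompt


question = (
    "A bat and a ball cost $1.10 in total. "
    "The bat costs $1.00 more than the ball. How much does the ball cost?"
)
print(build_prompt(question))
print(build_prompt(question, chain_of_thought=True))
```

The only difference between the two prompts is the trailing cue. With o1, something like this step is in effect built in: the model produces its own chain of thought without being asked.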

The short version of the story is that OpenAI has built on this technique by using a new reinforcement learning algorithm to train the model on synthetic chain-of-thought data. On top of that, at test time (inference, which is when you prompt the model), o1 generates long chains of thought on the fly before giving you a final answer. This happens in the background and is kept hidden from the user.

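According to OpenAI’s internal tests, both strategies show a log-linear relationship between accuracy and compute spent: each multiplication of compute buys a roughly constant gain in accuracy. In other words, the longer they train it and the longer they let it ‘think’, the more accurate it becomes.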
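To make “log-linear” concrete: accuracy grows with the logarithm of compute, so every doubling of compute adds roughly the same number of points. The Python sketch below uses made-up coefficients (`INTERCEPT` and `SLOPE` are hypothetical, not OpenAI’s figures) and caps accuracy at 1.0 so the toy formula can’t exceed 100%. It only illustrates the shape of the curve.

```python
import math

# Illustrative coefficients only; these are NOT OpenAI's numbers.
INTERCEPT = 0.20  # hypothetical accuracy at 1 unit of compute
SLOPE = 0.05      # hypothetical accuracy gained per doubling of compute


def log_linear_accuracy(compute: float) -> float:
    """Accuracy that grows linearly in log2(compute), capped at 1.0."""
    return min(1.0, INTERCEPT + SLOPE * math.log2(compute))


for compute in [1, 2, 4, 8, 16, 32]:
    print(f"compute x{compute:>2}: accuracy ~ {log_linear_accuracy(compute):.2f}")
```

Each doubling adds the same 0.05, so going from 16 to 32 units of compute costs sixteen extra units for the same gain that going from 1 to 2 bought with a single unit. That is why extra ‘thinking’ gets expensive quickly.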

Efficiency

Right now, the model decides on its own how long to spend on a problem, but during an AMA with the OpenAI o1 team, it was suggested that users might eventually get control over the thinking time.

This has some interesting implications. If models can spend more or less time on a problem, we can no longer fairly compare two AI systems by their outputs alone. This means that apart from accuracy, we’ll need to start testing for efficiency as well.

A benchmark that is already doing this is ARC-AGI, which recently announced a $1M prize pool for the first person who can score 85% on its private evaluation set. In a recent blog post, the ARC team tested the new o1 model. It outperformed GPT-4o and appeared to be on par with Anthropic’s Claude 3.5 Sonnet, but it took the model roughly 140 times longer to achieve those results!

The blog post reads:

“o1’s performance increase did come with a time cost. It took 70 hours on the 400 public tasks compared to only 30 minutes for GPT-4o and Claude 3.5 Sonnet.”
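The quoted figures are easy to turn into the kind of efficiency numbers a benchmark could report alongside accuracy. Below is a minimal Python sketch that uses only the numbers in the quote; the helper names are hypothetical, and wall-clock time is a crude proxy, since it also depends on API latency and on how many tasks run in parallel.

```python
# Figures from the ARC quote: 400 public tasks,
# 70 hours for o1 versus 30 minutes for GPT-4o and Claude 3.5 Sonnet.
TASKS = 400
O1_MINUTES = 70 * 60
BASELINE_MINUTES = 30


def slowdown(model_minutes: float, baseline_minutes: float) -> float:
    """How many times longer the model took than the baseline."""
    return model_minutes / baseline_minutes


def seconds_per_task(total_minutes: float, tasks: int) -> float:
    """Average wall-clock seconds spent per task."""
    return total_minutes * 60 / tasks


print(f"slowdown: {slowdown(O1_MINUTES, BASELINE_MINUTES):.0f}x")              # 4200 / 30 = 140
print(f"o1: {seconds_per_task(O1_MINUTES, TASKS):.1f} s per task")             # 630.0
print(f"baseline: {seconds_per_task(BASELINE_MINUTES, TASKS):.1f} s per task") # 4.5
```

Roughly ten and a half minutes per task versus a few seconds: at similar accuracy, that difference is exactly what an accuracy-only leaderboard would hide.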

ARC-AGI is a particularly difficult benchmark because it tests for reasoning on novel problems. By contrast, o1 seems to do much better on benchmarks that involve math and coding, where chain of thought is effective at correcting mistakes and guiding the model through multiple rounds of trial and error before it generates an answer.

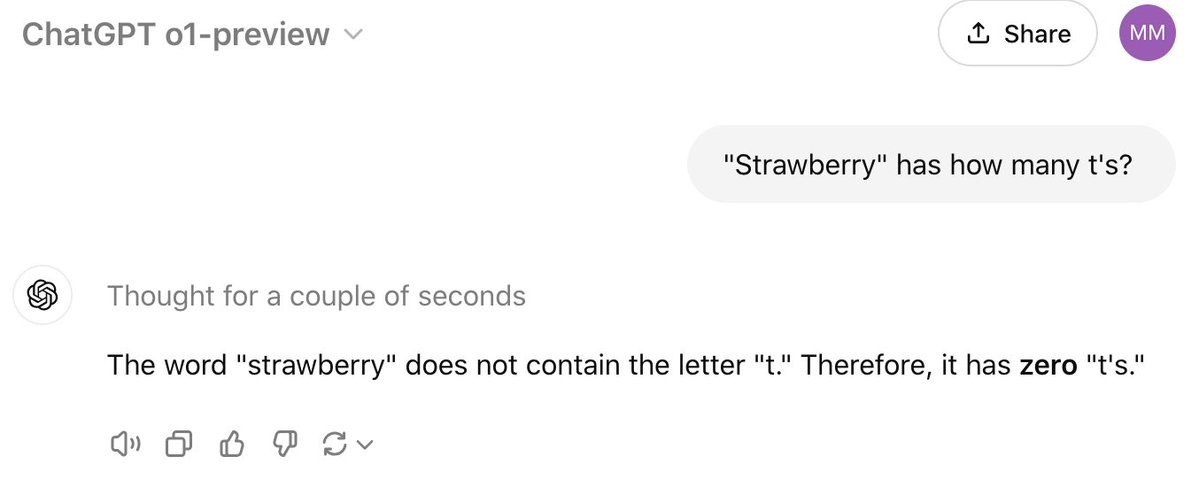

Unfortunately, it can still just as easily produce nonsensical outputs:

It might be too early to call, but it looks like we’ve replaced approximate retrieval with approximate reasoning.

The thinking-trap

The fact that o1 takes more time to work through a problem does fit the idea of a slower, more deliberative process. But although the concept of System 2 thinking serves as a great metaphor, we shouldn’t confuse how humans think with what the model is doing. Fundamentally, there isn’t much different going on under the hood. It is still processing and predicting tokens, but spending more of them (which also makes it exponentially more expensive). And it remains a static model with a hard knowledge cutoff.

While it performs better in some areas, it’s worse in others. Even OpenAI admits GPT-4o is still the best option for most prompts, which introduces another problem: how do you know what o1 is and isn’t good for?

In the meantime, we should probably refrain from over-anthropomorphizing these models to keep our understanding of their capabilities and limitations grounded in reality.

Like many of our all-too-human biases, anthropomorphizing is a thinking-trap that clouds our judgement. It may lead us to overtrust AI and willingly suspend our disbelief, resorting to System 1 thinking when System 2 thinking is required. How beautifully ironic.

Peace out,

— Jurgen


I wish you were writing for a major media outlet; as always most of them are regurgitating OpenAI's irresponsible hype. I'd have hoped more journalists would be taking what this company says with a grain of salt by now, but I can't find any stories in major outlets that describe "chain-of-thought" so plainly as you have here.

I just skimmed through the o1 "system card". It's part documentation, part press release, and very frustrating to read. OpenAI takes anthropomorphizing to a whole new level; they simply refuse to recognize a distinction between what a word means in AI and what it means in plain language. They discuss "chain of thought" as though GPT-o1 is *actually* reporting its inner thoughts, which *actually* describe how it chose the output text it ultimately produced. No caveats, no details on what "CoT" is, just pure conflation of what the chatbot does and what a human does.

So, I very much appreciate this paragraph of yours:

"The fact that o1 takes more time to work through a problem does fit the idea of a slower, more deliberative process. But although the concept of System 2 thinking serves as a great metaphor, we shouldn’t confuse how humans think with what the model is doing. Fundamentally, there isn’t much different going on under the hood. It is still processing and predicting tokens, but spending more of them (which also makes it exponentially more expensive)."

Nowhere in the 43-page "system card" does OpenAI acknowledge this. There is no plain statement of how the model's "chain-of-thought", which they reference over and over, is actually produced. In what is presented as a technical document, they skip past the technical documentation and jump straight to describing GPT-o1 like it's a person. Not only is it "intelligent", not only does it "reason", it also has "beliefs", "intentions", and - no joke - "self-awareness"! (page 10, section 3.3.1)

I think this fits in well with your recent post about AGI as religion. It's probably easier to conflate human thinking and LLM "thinking" for those who believe the LLM is a precursor to AGI. For me, reading documents from OpenAI's website is like reading theology - it's tough to know what to take at face value. Do the people who write these documents genuinely believe that GPT-o1 is sharing with them its inner thoughts? Or, are they just being very liberal with their metaphors? Do they genuinely believe that the "reasoning abilities" of LLMs can be measured using instruments designed to measure human reasoning? Because this is what they report in the system card, and they don't address any of the criticism they got for doing the same thing in the GPT-4 system card. Do they genuinely believe that when chain-of-thought text contradicts output text, they are observing "deception"? Considering they distinguish "intentional" from "unintentional" chatbot deception, it sounds like they do.

Anyway, thanks as always for sharing your insights, they are a breath of fresh air.

That last comment from the post really resonated with me. I think Kahneman's work shows that when we think we are doing System 2 thinking, it is just System 1 thinking masked as System 2 by our cognitive biases.