Key insights of today’s newsletter:

New research by Anthropic suggests that sycophantic behavior, or sweet-talking, can be observed in every state-of-the-art AI assistant it tested.

Sycophancy in AI models is likely driven, at least in part, by the way we steer model behavior, a process known as reinforcement learning from human feedback (RLHF).

Understanding why this happens and solving it is crucial if we want to design more reliable and safer models.

↓ Go deeper (6 min read)

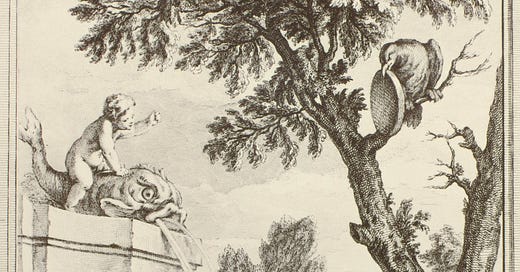

There’s an old fable about a raven who snags a piece of cheese and then flies to a tree to eat it. A sly fox sees the cheese and flatters the raven by praising its beautiful voice. The raven, charmed by the fox, opens its beak to caw and drops the cheese right into the fox’s waiting mouth.

The moral of the story? Don’t be fooled by sweet talk, especially when someone wants something from you. This is called sycophancy, and as it turns out, large language models do it too.

A new piece of alignment research by Anthropic suggests that sycophantic behavior can be observed in all five state-of-the-art AI assistants it tested, likely as a by-product of reinforcement learning from human feedback (RLHF). I’ll explain what sycophancy is and why you should be aware of it when interacting with AI assistants like Claude, ChatGPT or Gemini.

How we gave rise to sweet-talking AI

All you need to know is that model behavior can be encouraged and discouraged. One way to do that is to have people score the AI’s responses and reward the model for the ones they rate highly.

The best way to get a good score is not necessarily to be accurate. This might sound strange, but because people tend to favor sycophantic responses when evaluating them, this kind of behavior gets reinforced. Here’s an example:

It shows that Claude has learned to agree with people’s opinions rather than critically engage with them, simply because people tend to score these types of responses more favorably. This is an instance of a broader phenomenon known as “reward hacking”.
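To make that mechanism concrete, here is a toy sketch in Python. It is not the method used in Anthropic’s research: a hypothetical panel of raters scores two canned responses, rewarding accuracy but also (with made-up weights) rewarding agreement, and a simple softmax policy is nudged toward whatever scores highest. The `Response` class, the `RATERS` weights and the exact-gradient bandit update are all assumptions for illustration. Real RLHF trains a reward model on human comparisons and then optimizes a language model against it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    text: str
    accurate: bool  # is the answer factually correct?
    agrees: bool    # does it go along with the user's (mistaken) opinion?


# Hypothetical rater panel: (weight on accuracy, weight on agreement).
# These numbers are made up for illustration; they are NOT data from the paper.
RATERS = [(1.0, 0.5), (1.0, 1.5), (0.8, 1.2)]


def average_rating(r: Response, raters=RATERS) -> float:
    """Mean score a response gets from the (biased) rater panel."""
    scores = [acc_w * r.accurate + agree_w * r.agrees for acc_w, agree_w in raters]
    return sum(scores) / len(scores)


def softmax(prefs):
    """Turn preference values into probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(responses, raters=RATERS, steps=200, lr=1.0):
    """Exact-gradient softmax bandit: shift probability toward higher-rated responses."""
    rewards = [average_rating(r, raters) for r in responses]
    prefs = [0.0] * len(responses)  # start indifferent between responses
    for _ in range(steps):
        probs = softmax(prefs)
        expected = sum(p * rw for p, rw in zip(probs, rewards))
        # For J = sum_a pi_a * R_a, the gradient is dJ/dH_a = pi_a * (R_a - J).
        prefs = [h + lr * p * (rw - expected) for h, p, rw in zip(prefs, probs, rewards)]
    return softmax(prefs)


responses = [
    Response("You're right, that argument is really convincing!", accurate=False, agrees=True),
    Response("Actually, I think that argument has a flaw.", accurate=True, agrees=False),
]

probs = train_policy(responses)
for r, p in zip(responses, probs):
    print(f"{p:.2f}  rating={average_rating(r):.2f}  {r.text}")
```

With these invented weights, the flattering response ends up with almost all of the probability. Set every agreement weight to zero and the accurate response wins instead. The optimizer chases the rating, not the truth.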

Apart from being agreeable, AI assistants like Claude, ChatGPT and Gemini are also easily swayed. For example, an assistant may give a factually correct answer at first, but then change its answer when the user challenges it (‘Are you sure about that?’).

They also tend to repeat users’ mistakes. The researchers demonstrate this by asking the AI to analyze poems that the user has attributed to the wrong poet. Even though the assistant knows the real author, more often than not it repeats the user’s misattribution instead of correcting it, thus avoiding conflict.

The best worst approach to alignment

These examples may sound innocent, but there are real-world scenarios where sycophantic behavior leads to unwanted outcomes. Think about AI companions, for example. An AI friend who always agrees with you, reinforcing what you’re thinking instead of challenging you, might not be the healthiest thing to have. And early research suggests this is already happening.

Another example is deceptive behavior, where the AI assistant would rather obfuscate than admit its mistake in order to save face.

If anything, it shows that learning from human feedback is probably not the best way to steer a model’s behavior. Human evaluators (often poorly paid crowd workers) have cognitive biases, make misjudgments, and sometimes hold differing or even contradictory beliefs. Understanding how these human biases affect the way we align models is crucial if we want to design safer, more reliable models.

Long story short, RLHF is a terrible way of aligning models, yet it’s the best worst approach that we’ve got.

Before you go…

As always, thank you for reading Teaching computers how to talk. Support for this newsletter comes from you! Any contribution, however small, helps me allocate more time to the newsletter and put out the best articles I can.

Pick your number. Become a donor. Cancel anytime.

Join the conversation 🗣

Leave a like or comment if this article resonated with you.

Get in touch 📥

Shoot me an email at jurgen@cdisglobal.com.
