Key insights of today’s newsletter:

Meta open-sourced Llama 3, their newest generation of large language models, powering their own Meta AI assistant.

Open sourcing their models is part of a very deliberate, strategic playbook challenging the status quo of AI companies like OpenAI and Anthropic selling inference.

Meta’s apps (WhatsApp, Instagram, Facebook) also provide the company with an unparalleled distribution opportunity for their own smart, integrated assistant.

↓ Go deeper (11 min read)

Last week, Meta open-sourced Llama 3, their newest generation of large language models, in 8B and 70B parameter versions. The reception has been overwhelmingly positive.

In their blog post, Meta announced an even bigger 400B-parameter model that is still in training, and mentioned plans to release further models with new capabilities, including multimodality, more languages, longer context windows, and stronger overall performance. A detailed research paper is expected to be published soon, too.

If that wasn’t enough already, Meta also launched a dedicated website, which looks like a copy-paste of the ChatGPT interface (why invent if you can steal?) and it started to roll out its Meta AI assistant globally:

“Built with Meta Llama 3, Meta AI is one of the world’s leading AI assistants, already on your phone, in your pocket for free. And it’s starting to go global with more features. You can use Meta AI on Facebook, Instagram, WhatsApp and Messenger to get things done, learn, create and connect with the things that matter to you. We first announced Meta AI at last year’s Connect, and now, more people around the world can interact with it in more ways than ever before.

We’re rolling out Meta AI in English in more than a dozen countries outside of the US. Now, people will have access to Meta AI in Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia and Zimbabwe — and we’re just getting started.”

It gives one pause to think about why they’re doing what they’re doing. Why are they open-sourcing their AI models, effectively giving them away for free? Why are they developing their own models in the first place?

The answer is that Meta is following a very deliberate, strategic playbook — and it’s starting to pay dividends.

Why Meta develops its own models

On 18 April, Mark Zuckerberg sat down with Dwarkesh Patel, a rising star regarded as ‘the new Lex Fridman’, for an in-depth interview. He explained that for Meta the model, the AI itself, is not the product.

Meta, formerly known as Facebook, is not a foundation model company like OpenAI, Anthropic or Cohere, and it has no intention of becoming one. Meta primarily earns revenue by selling advertising on its social media platforms. It runs an ads business, and ads businesses are about harvesting eyeballs. You are the product.

Over the past year, AI has shown it can capture people’s attention, so integrating AI into Meta’s products makes a lot of sense. As one commentator puts it: “Meta’s apps provide the broadest distribution for an assistant of any global company, with four billion monthly active users”. It adds value to existing products and helps win new souls. However, if Meta were to rely on a third party for that, the costs would be astronomical and it would create undesirable dependencies. That is why they decided to develop their models in-house.

But why, then, give your models away for free? Well, believe it or not, it’s a tried-and-tested strategy for becoming the industry standard. Just as Google open-sourced Android fifteen years ago in a successful attempt to catch up with Apple’s iPhone and become the dominant player in the mobile industry, Meta is calling the market’s bluff.

Every company trying to create models and sell inference (OpenAI, Google, Microsoft, Amazon, Cohere, Anthropic, etc.) is effectively running a losing race. “Your product isn’t a product,” is what Meta is saying, by forcing them to compete with something that is obviously very capable, freely available, and customizable to one’s heart’s desire.

What Meta is getting out of this

Giving today’s tech leaders a big FU is a surprisingly good look for Zuckerberg, who at the same time is spearheading a company that is keenly aware it is causing kids significant physical and mental harm and not too long ago publicly apologized to those families, whilst failing to admit to any wrongdoing, during a hearing in the US Senate.

The reality is that Meta is not doing this because of some newfound idealism. It’s business strategy. Not only will it most likely prove effective in stifling its competition, there are also several other strategic benefits to open sourcing, all of which lead to Meta coming out on top when the dust settles:

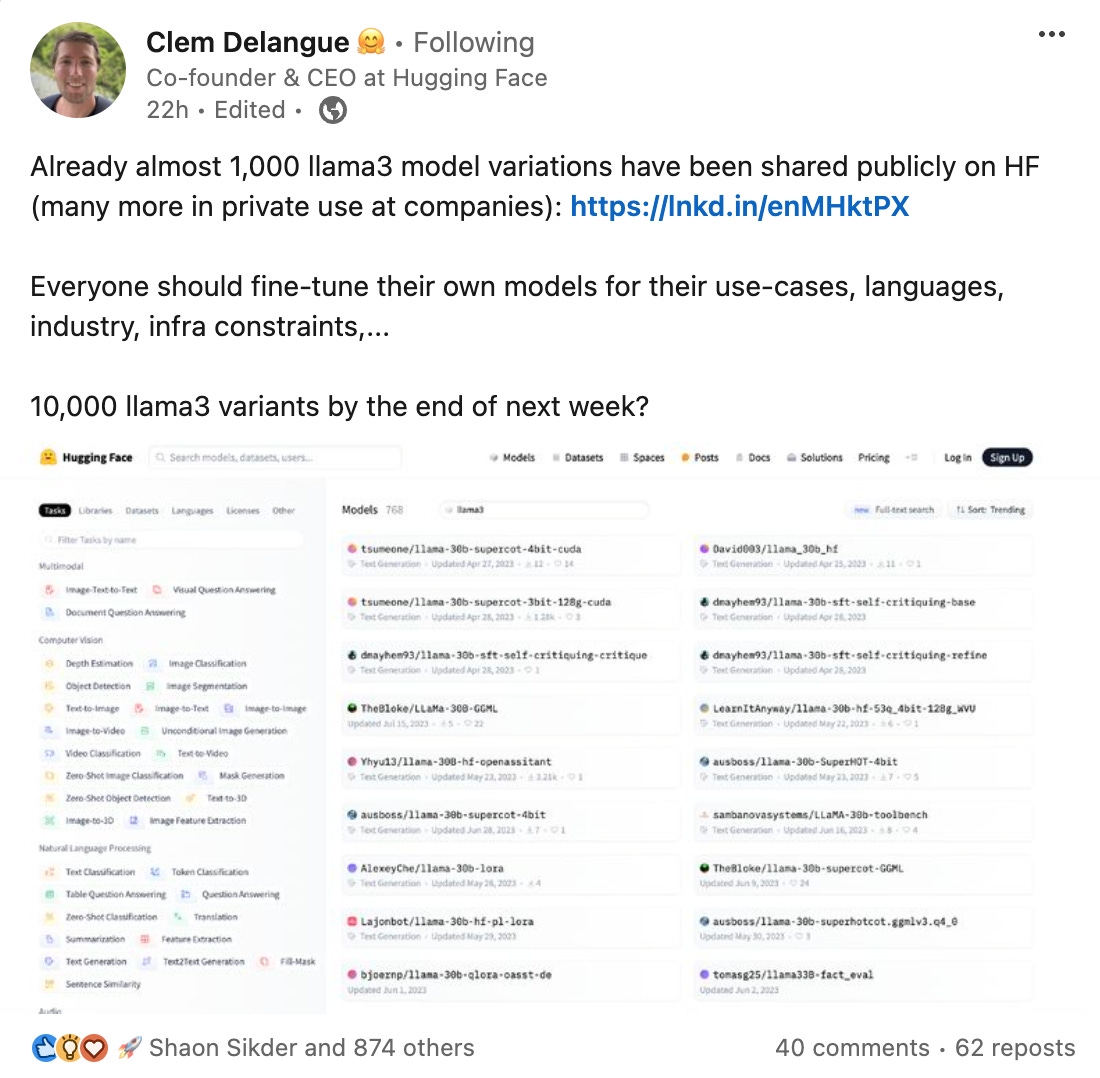

Open sourcing software is a way of outsourcing innovation to a wide audience of researchers and developers. Over time, Meta’s Llama models may well become the industry standard, while Meta retains the ability to learn and incorporate anything of value into their own products, whenever they feel like it.

Open source is hugely popular with developers and researchers, which helps Meta attract and recruit the best people. That is a big deal. The war in AI is fought on many fronts: to succeed you need data, compute and talent, and all three are scarce at the moment. So being able to access a pool of highly talented people who are already comfortable working with your platform is precious.

And lastly, security. While this may be a contentious point, typically open source software is more secure, because of the community effect. In simple terms, more people are inspecting the code which enables more issues to be found, quicker.

I say contentious because open sourcing LLMs is a knife that cuts both ways. A paper published last month, titled BadLlama: cheaply removing safety fine-tuning from Llama 2-Chat 13B, demonstrated:

“… that it is possible to effectively undo the safety fine-tuning from Llama 2-Chat 13B with less than $200, while retaining its general capabilities. Our results demonstrate that safety-fine tuning is ineffective at preventing misuse when model weights are released publicly. Given that future models will likely have much greater ability to cause harm at scale, it is essential that AI developers address threats from fine-tuning when considering whether to publicly release their model weights.”

Security has been the biggest argument against open-sourcing from competitors like OpenAI. Of course, they know that we know that they are not acting selflessly here either.

The endgame

Meta seems to be comfortable releasing their 400B parameter-sized Llama model, including model weights, regardless. It’s a model that is expected to be as capable as GPT-4, and just like the previous generations, it will be stripped of its guardrails quicker than Sam Altman can say AGI.

Asked about this by Dwarkesh Patel during the podcast, Zuckerberg said:

“I don’t think anyone should be dogmatic about how they plan to develop it or what they plan to do. You want to look at it with each release. (…) If at some point however there is some qualitative change in what the thing is capable of, and we feel it is not responsible to open source it, then we won’t.”

It’s unclear where Zuckerberg will eventually draw a line in the sand.

What is clear, however, is that Meta is well-positioned, uniquely positioned I would say, to capitalize on its open-source playbook. It has the data, the compute, and the talent. It will try to suck dry the competition and lavishly sprinkle their products and services with the fairy dust that is AI. They have our attention.

Get in touch 📥

Shoot me an email at jurgen@cdisglobal.com.