Here’s what you need to know:

ARC-AGI is a benchmark designed to measure AI’s ability to acquire skills and progress towards human-level intelligence.

The benchmark received global attention after a 1 million dollar prize pool was announced by Mike Knoop, François Chollet, and Lab42.

The interesting thing about ARC is that the test questions are deceptively simple: a five-year-old could solve them. Yet no AI has been up to the task.

↓ Go deeper (10 min read)

This article was originally published as a guest contribution on AI Supremacy, one of the top publications on Substack. AI Supremacy captures state-of-the-industry insights in artificial intelligence, technology and business.

Artificial general intelligence (AGI) progress has stalled. New ideas are needed. That’s the premise of ARC-AGI, an AI benchmark that has garnered worldwide attention after Mike Knoop, François Chollet, and Lab42 announced a 1 million dollar prize pool.

ARC-AGI stands for “Abstraction and Reasoning Corpus for Artificial General Intelligence” and aims to measure how efficiently an AI acquires skills on unknown tasks. François Chollet, the creator of ARC-AGI, is a deep learning veteran. He’s the creator of Keras, an open-source deep learning library adopted by over 2.5M developers worldwide, and works as an AI researcher at Google.

The ARC benchmark isn’t new. It has been around for five years. And here comes the crazy part: since its introduction in 2019, it hasn’t been solved.

What makes ARC so hard for AI to solve?

Now I know what you’re thinking: if AI can’t pass the test, this ARC thing must be pretty hard. Turns out, most of its puzzles can be solved by a five-year-old.

The benchmark was explicitly designed to compare artificial intelligence with human intelligence. It doesn’t rely on acquired or cultural knowledge. Instead, the puzzles (for lack of a better word) require ‘core knowledge’. These are things that we as humans naturally understand about the world from a very young age.

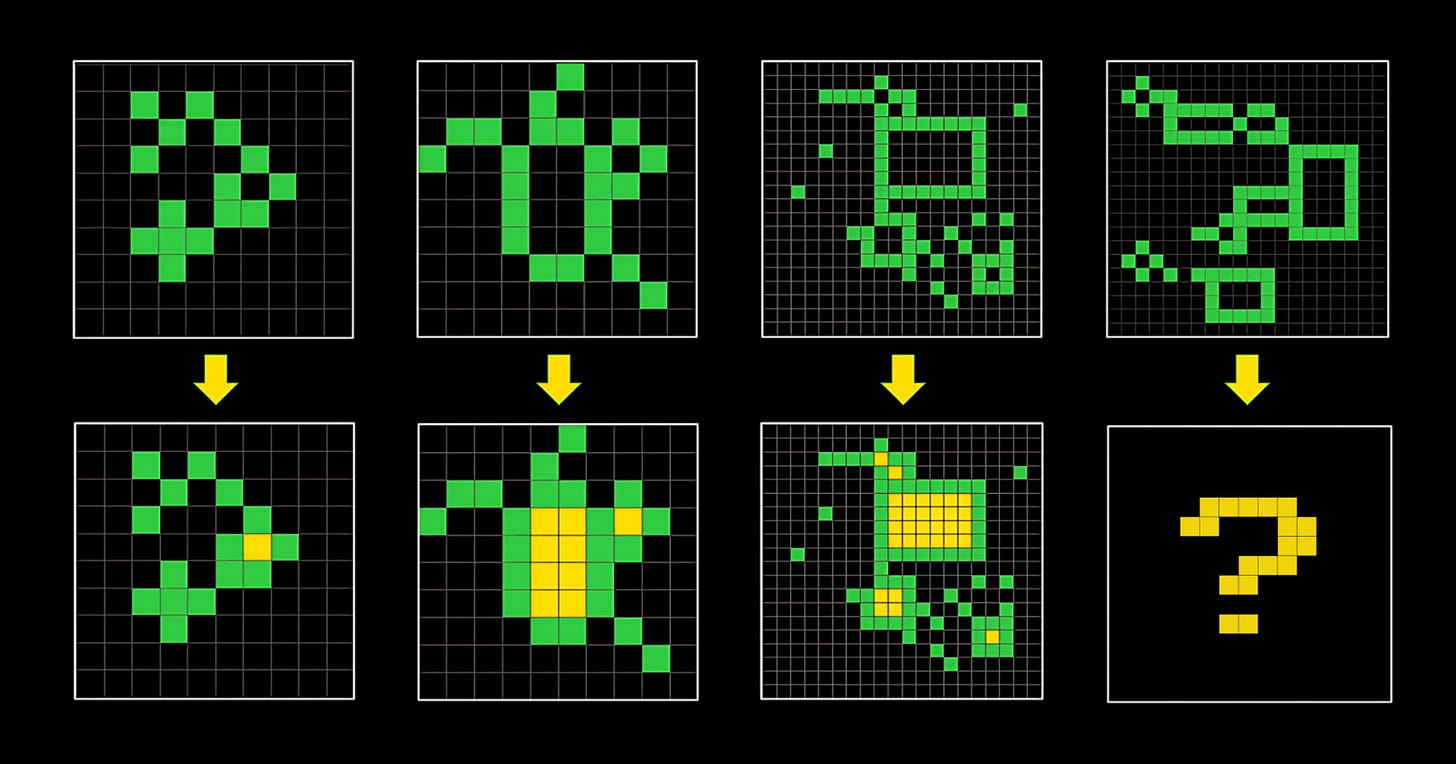

Here are a few examples:

Objectness

Objects persist and cannot appear or disappear without reason. Objects can interact or not depending on the circumstances.

Goal-directedness

Objects can be animate or inanimate. Some objects are “agents”: they have intentions and they pursue goals.

Numbers & counting

Objects can be counted or sorted by their shape, appearance, or movement using basic mathematics like addition, subtraction, and comparison.

Basic geometry & topology

Objects can be shapes like rectangles, triangles, and circles which can be mirrored, rotated, translated, deformed, combined, repeated, etc. Differences in distances can be detected.
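To make the counting and geometry priors a little more concrete, here is a minimal Python sketch. It assumes a puzzle can be represented as a small grid of integer color codes stored as a list of rows. That representation, and the helper names `mirror_left_right`, `rotate_clockwise`, and `count_cells`, are illustrative assumptions, not something this article specifies.

```python
from typing import List

# Assumption: a puzzle grid is a list of rows, each cell an integer color code.
Grid = List[List[int]]


def mirror_left_right(grid: Grid) -> Grid:
    """Mirror the grid across its vertical axis (left becomes right)."""
    return [list(reversed(row)) for row in grid]


def rotate_clockwise(grid: Grid) -> Grid:
    """Rotate the grid by 90 degrees clockwise."""
    # Reverse the row order, then transpose.
    return [list(row) for row in zip(*grid[::-1])]


def count_cells(grid: Grid, color: int) -> int:
    """Count how many cells have the given color code."""
    return sum(row.count(color) for row in grid)


# A hypothetical 2x3 grid: color 1 forms a small L-shaped object on background 0.
example = [
    [1, 0, 0],
    [1, 1, 0],
]

mirrored = mirror_left_right(example)
rotated = rotate_clockwise(example)
object_size = count_cells(example, 1)
```

A child sees at a glance that the mirrored and rotated shapes are “the same object.” The hard part for an AI is not applying such a transformation but working out, from a couple of examples, which one a given puzzle calls for.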

As children, we learn experimentally. We learn by interacting with the world, often through play, and that which we come to understand intuitively, we apply to novel situations.

But wait, didn’t ChatGPT pass the bar exam?

Now, you might be under the impression that AI is pretty smart already. With every test it passes — whether it is a medical, law, or business school exam — it strengthens the idea that these systems are intellectually outclassing us.

If you believe the benchmarks, AI is well on its way to outperforming humans on a wide range of tasks. Surely it can solve this ARC-test, no?

To answer that question, we should take a closer look at how AI manages to pass these tests.

Language models have the ability to store a lot of information in their parameters, so they tend to perform well when they can rely on stored knowledge rather than reasoning. They are so good at storing knowledge that sometimes they even regurgitate training data verbatim, as evidenced by the court case brought against OpenAI by The New York Times.

So when it was reported that GPT-4 passed the bar exam and the US medical licensing exam, the question we should ask ourselves is: could it have simply memorized the answers? We can’t check if that is the case, because we don’t know what is in the training data, since few AI companies disclose this kind of information.

This is commonly known as the contamination problem. And it is for this reason that some researchers have called evaluating LLMs a minefield.

ARC does things differently. The test doesn’t rely on knowledge in the sense of facts and figures, so a model can’t fall back on what it has stored in its parameters. Instead, the benchmark consists exclusively of visual puzzles that are pretty obvious to solve (for humans, at least).

To tackle the problem of contamination, ARC uses a private evaluation set. This ensures that the test itself doesn’t become part of the data that the AI is trained on. To be eligible for the prize money, participants also need to open source their solution and publish a paper outlining what they’ve done to solve it.

This rule does two things:

It forces transparency, making it harder to cheat.

It promotes open research. While strong market incentives have pushed companies to go closed source (with OpenAI setting the example and others following suit), it wasn’t always like that. ARC was created in the spirit of the days when AI research was still done in the open.

Are we getting closer to AGI?

ARC’s prize money is awarded to the team, or teams, that score at least 85% on the private evaluation during an annual competition period. This year’s competition runs until November 10, 2024, and if no one claims the grand prize, it will continue during the next annual competition. Thus far no AI has been up to the task.
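As a rough illustration of what an 85% bar means, the sketch below scores a batch of predicted grids against hidden solutions. It assumes exact-match scoring (a task counts as solved only if the predicted grid equals the solution cell for cell) and a list-of-rows grid representation. Both are assumptions made for illustration, and `fraction_solved` and `meets_threshold` are hypothetical names. The official competition rules may differ in their details.

```python
from typing import List

# Assumption: a grid is a list of rows of integer color codes.
Grid = List[List[int]]

# From the article: the prize requires at least 85% on the private evaluation.
PRIZE_THRESHOLD = 0.85


def fraction_solved(predictions: List[Grid], solutions: List[Grid]) -> float:
    """Return the share of tasks where the prediction matches the solution exactly."""
    if len(predictions) != len(solutions):
        raise ValueError("predictions and solutions must have the same length")
    if not solutions:
        return 0.0
    solved = sum(1 for pred, sol in zip(predictions, solutions) if pred == sol)
    return solved / len(solutions)


def meets_threshold(score: float) -> bool:
    """Check a score against the 85% bar mentioned in the article."""
    return score >= PRIZE_THRESHOLD


# Hypothetical toy run: two tasks, one solved and one missed.
toy_predictions = [[[1, 0], [0, 1]], [[2, 2]]]
toy_solutions = [[[1, 0], [0, 1]], [[2, 3]]]
toy_score = fraction_solved(toy_predictions, toy_solutions)
```

Under exact matching there is no partial credit: a grid that is off by a single cell counts as a miss, which is part of why memorized patterns don’t help much here.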

According to Chollet, progress toward AGI has stalled. While LLMs are trained on unimaginably vast amounts of data, they remain brittle reasoners and are unable to adapt to simple problems they haven’t been trained on. Despite that, capital keeps pouring in, in the hope these capabilities will somehow emerge from scaling our current approach. Chollet, and others with him, have argued this is unlikely.

To promote the launch of the ARC Challenge, Chollet and Knoop were interviewed by Dwarkesh Patel. During that interview, Chollet said something that stuck with me: “Intelligence is what you use when you don’t know what to do.” The quote is attributed to Jean Piaget, a Swiss psychologist who wrote extensively about cognitive development in children.

The simple nature of the ARC puzzles is what makes it so powerful. Most AI benchmarks measure skill. But skill is not intelligence. General intelligence is the ability to efficiently acquire new skills. And the fact that ARC remains unbeaten speaks to its resilience. Oh, and to those who think that solving ARC equals solving AGI…

Looking to test your own intelligence on the ARC benchmark? You can play here.


I strongly recommend the conversation between Tim Scarfe (Machine Learning Street Talk, Chollet/ARC fan for years) and Ryan Greenblatt (Redwood researcher).

https://podcasters.spotify.com/pod/show/machinelearningstreettalk/episodes/Ryan-Greenblatt---Solving-ARC-with-GPT4o-e2lnplq

"But skill is not intelligence." is a crucial insight. Great write-up of an important figure in AI. I caught Chollet on Sean Carroll's Mindscape podcast, which I think is a good introduction.