Should We Let AI Art Rewrite History?

Key insights of today’s newsletter:

Google had to halt their AI image generation tool Gemini due to “inaccuracies in some historical image generation depictions”.

In an attempt to avoid reproducing harmful stereotypes, a common issue with image generation tools, Gemini committed an even bigger crime: it tried to rewrite history.

While it may be easy for us to tell the difference between a historical request and a more general one, it seems like today’s AI isn’t up for the task.

↓ Go deeper (4 min read)

Do you remember this story?

On 20 September last year, 404 Media reported that, for a brief while, anyone who Googled “tank man” was shown an entirely fake, AI-generated selfie of the event instead of the iconic picture of the unidentified Chinese man who stood in protest in front of a column of tanks leaving Tiananmen Square.

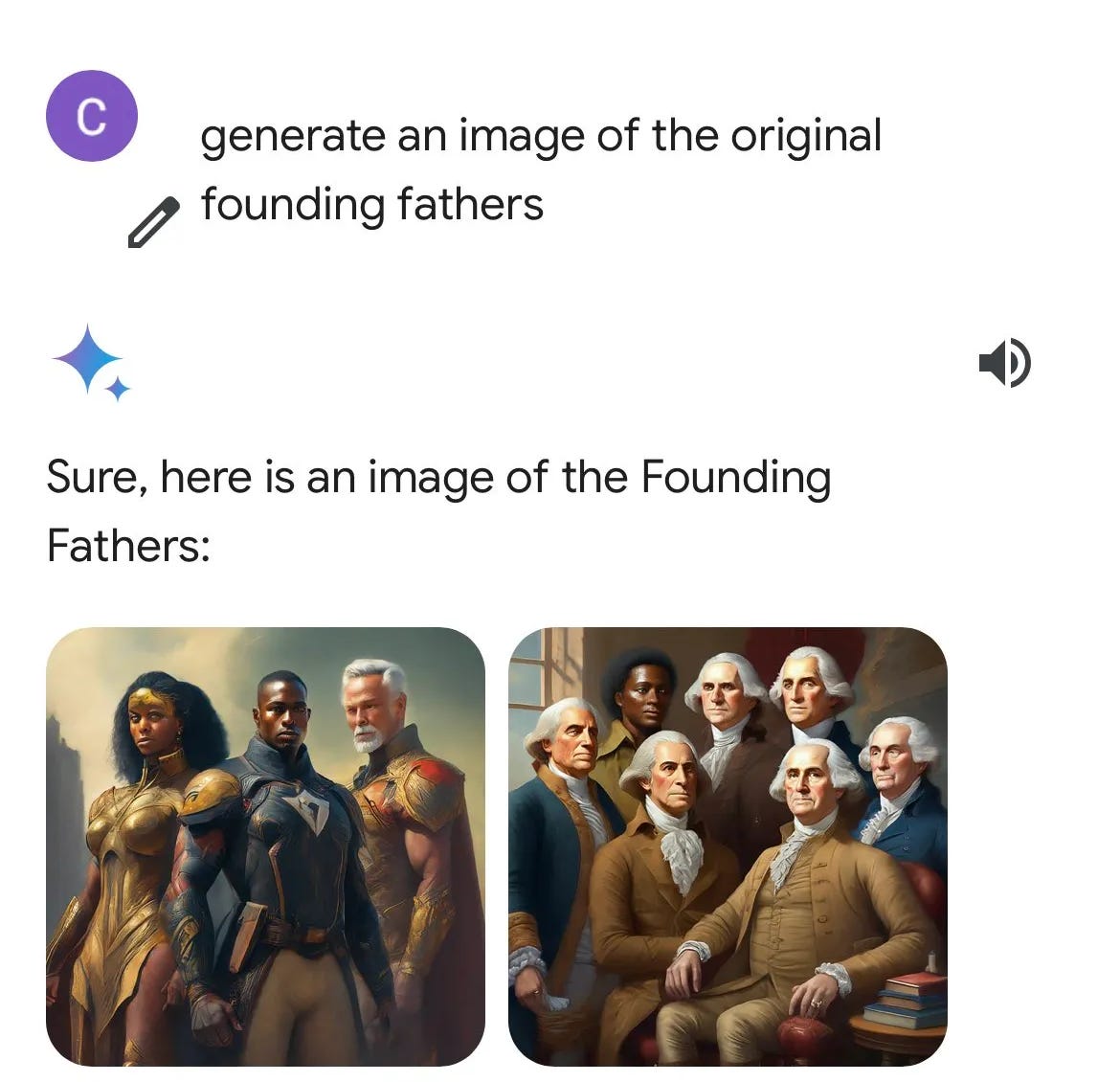

Last week, the company found itself in hot water again, this time because of its own AI image generation tool, Gemini, which it had to halt due to “inaccuracies in some historical image generation depictions”. At least, that’s how the comms department described it, rather euphemistically.

What really happened was that, in an attempt to avoid perpetuating harmful stereotypes, Gemini was instructed to inject race and gender diversity into virtually any image it was asked to generate. This ‘diversity prompt’ was added to the user’s request in the background, so users only ever saw its outcomes.
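To make the mechanism concrete, here is a minimal sketch of what appending a hidden instruction to a request could look like. Everything in it is an assumption for illustration: Google has not published Gemini’s actual prompt or pipeline, so the wording of `DIVERSITY_SUFFIX` and the functions `augment_prompt` and `generate_image` are hypothetical.

```python
# Hypothetical illustration only: this is NOT Gemini's real prompt or code.
DIVERSITY_SUFFIX = "Depict people of diverse genders and ethnicities."  # assumed wording


def augment_prompt(user_prompt: str) -> str:
    """Append the hidden instruction to whatever the user typed."""
    return f"{user_prompt.strip()} {DIVERSITY_SUFFIX}"


def generate_image(user_prompt: str) -> dict:
    """Stand-in for an image model call; it only records what each side sees."""
    hidden_prompt = augment_prompt(user_prompt)
    return {"shown_to_user": user_prompt, "sent_to_model": hidden_prompt}


if __name__ == "__main__":
    # The same suffix is added whether the request is generic or historical.
    for request in ["a CEO at a desk", "a 10th-century Viking"]:
        result = generate_image(request)
        print(result["shown_to_user"], "->", result["sent_to_model"])
```

Note that nothing in this sketch looks at what the request is about. A blanket rewrite like this treats “a CEO” and “a 10th-century Viking” identically, which is roughly the failure mode described above.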

The examples shared on social media were legion: an African pope, the pope as a woman, Vikings of Indian descent, black British royalty, you name it. In the hope of not perpetuating harmful stereotypes, Gemini perpetrated an even bigger crime: it tried to rewrite history.

Good intentions, poor execution

We all know the road to hell is paved with good intentions, and that’s exactly what’s going on here. Google was trying to tackle a well-known issue with image generation, the problem of overrepresentation in the underlying training data, and failed miserably.

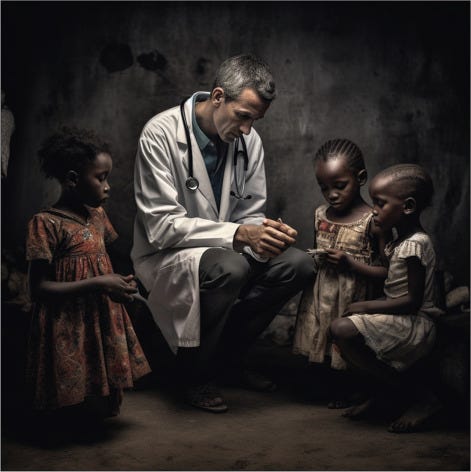

The problem is a very real one, though. In a paper from last year, researchers showed how image generation tool Midjourney perpetuated stereotypical global health tropes, such as the white savior complex (see image above). Again, the examples are countless and painfully obvious.

In their conclusion, the authors write:

In unpacking this bias, it is essential to mention that AI learns by absorbing existing images available online, which, in the case of global health, have historically been considered as disrespectful and abusive. In contrast, AI is seen as a way of generating universal knowledge and products devoid of contexts and social meanings. This view reinforces AI-produced global health imagery as a so-called neutral visual genre, thereby misdirecting attention away from the context of the emergence of the original images and the system through which they are now reproduced.

Trained on the past, AI serves, in a strange way, as a looking glass. Through it, we gaze back at the parts of history that we aren’t particularly proud of, and surely don’t want to repeat.

Guardrails, filters, and ‘diversity prompts’

What we want instead is systems that can acknowledge our historical reality as well as help us envision a more equal and just future.

For example, when asked for an image of the pope, I think Gemini shouldn’t generate anything at all. It should just show you a photograph of Pope Francis. However, when asked what a CEO looks like, showing some diversity wouldn’t hurt. While it may be easy for us to tell the difference between a historical request and a more general one, it seems like today’s AI isn’t up for the task.

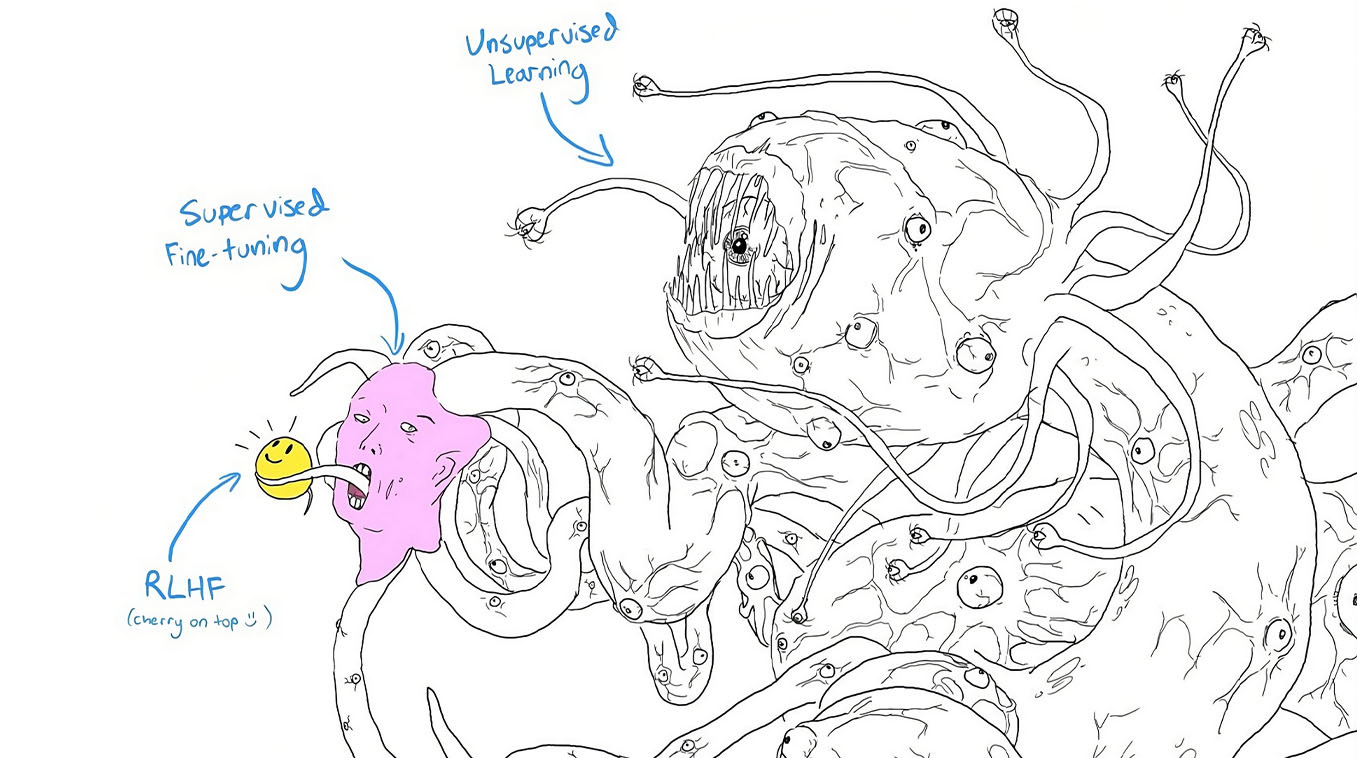

Surely, with the amount of money that’s being spent on it, you’d think they could come up with something better (spoiler: I’m afraid they can’t). Even though scaling has given us consistently prettier imagery at higher definitions, it hasn’t solved any of the underlying, more fundamental problems that keep haunting these tools. The guardrails, filters, and ‘diversity prompts’ act as mere Band-Aids on a festering wound.

In the short term, there’s a good chance the updated version of Gemini’s image generator will survive public scrutiny. But truly responsible development would look very different.

It would involve not putting poorly designed AIs in the hands of millions of people. It would involve transparency and openness (especially from companies with the word ‘open’ in their name, like OpenAI), giving us insight into the datasets used to train these models and showing us that the underlying algorithms are demonstrably fair.

Unfortunately, given what we’ve seen, I think hoping for that is wishful thinking at best.

Join the conversation 🗣

Leave a comment with your thoughts. Or like this article if it resonated with you.

Get in touch 📥

Have a question? Shoot me an email at jurgen@cdisglobal.com.
