Seemingly Conscious AI Is Already Here

...

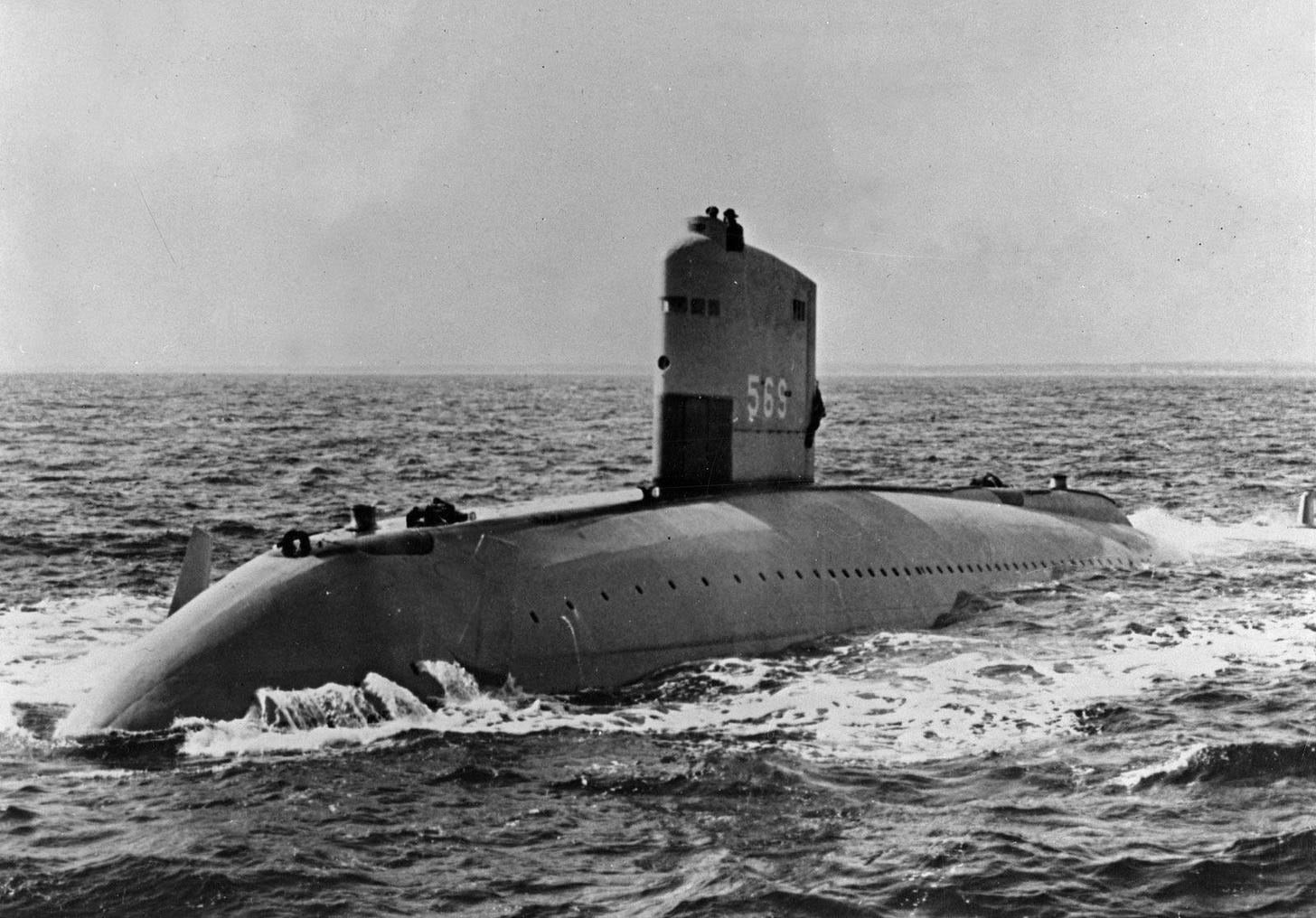

The question of whether a computer can think is no more interesting than the question of whether a submarine can swim.

— Edsger Dijkstra

In a recent essay, Mustafa Suleyman, CEO of Microsoft AI, calls the arrival of seemingly conscious AI both “inevitable” and “unwelcome”. It’s a well-written and timely piece. Unfortunately, his message is likely to fall on deaf ears.

Recent headlines suggest that the arrival of Seemingly Conscious AI is already being mistaken for Actually Conscious AI.

Leading researchers at AI companies like Anthropic believe LLMs may be conscious or could become conscious in the near future, and openly engage in discourse about ‘AI Welfare’. Nobel Prize winner Geoffrey Hinton has made similar comments. And there are even non-profit organizations dedicated to “understanding and addressing the potential wellbeing and moral patienthood of AI systems”.

What bothers me, however, is that no one is providing a cogent argument for why this is worthy of consideration.

The lack of scientific consensus is a red herring

Not too long ago, Anthropic officially announced that it was exploring model welfare. The goal: to figure out how to determine when, or if, the welfare of AI systems deserves moral consideration. They expressed uncertainty, noting that there’s currently “no scientific consensus on whether current or future AI systems could be conscious”, a level of uncertainty that, in their view, warrants further investigation.

While I don’t object to that in principle, the researchers made no effort to articulate whether there are any good reasons to be uncertain.

As I see it, it’s on the party making the positive claim to present compelling evidence. And big claims require big evidence. That means the AI-may-be-conscious crowd has to show why the jump from 𝗰𝗮𝗹𝗰𝘂𝗹𝗮𝘁𝗼𝗿 = 𝗱𝗲𝗳𝗶𝗻𝗶𝘁𝗲𝗹𝘆 𝗻𝗼𝘁 𝗰𝗼𝗻𝘀𝗰𝗶𝗼𝘂𝘀 to 𝗻𝗲𝘂𝗿𝗮𝗹 𝗻𝗲𝘁 = 𝗽𝗼𝘀𝘀𝗶𝗯𝗹𝘆 𝗰𝗼𝗻𝘀𝗰𝗶𝗼𝘂𝘀 is a rational one to make. Because that’s essentially what an LLM is: a calculator on steroids.

A surprisingly common rebuttal I hear is “you can’t prove AI isn’t conscious”. But to me, that obfuscates the question; it’s an obvious red herring. It reminds me of religious people asking skeptics to prove God doesn’t exist. I’m not the one making the claim, son!

If that doesn’t work, they’ll say something along the lines of “human consciousness isn’t very well understood either”. And while technically true — there are indeed many competing theories of consciousness and the majority of philosophers and/or scientists aren’t sold on any one theory — it shifts the goalposts.

It’s bad philosophy to say “LLMs are complex and mysterious, and so is consciousness”, therefore “LLMs may be conscious”.

A botfly is more complex than a language model

First of all, LLMs aren’t that complex. A botfly is arguably more complex than a language model. Like other insects, a botfly is composed of millions of cells. And while we understand its lifecycle, scientists struggle to even build a single functioning cell from scratch, let alone an entire insect.

A language model, by contrast, is complex in terms of scale, but easy to make. So easy, in fact, that you could build one yourself. All you need to do is watch Andrej Karpathy’s video on reproducing GPT-2, which takes less than 10 bucks’ worth of cloud compute and a few hours of your spare time.

Another important myth to dispel is the idea that consciousness is poorly understood. Scientifically, it is. Pragmatically, it’s not. If it were, you wouldn’t be able to tell whether your mom or dad is conscious. Or your next-door neighbor. Or the colleague sitting at the desk across from you.

So what constitutes a conscious experience? A body with a brain seems to be a prerequisite. Beyond that: a central nervous system and the ability to experience pain, both physically and emotionally; the ability to feel feelings like grief, sadness, gratitude, and kindness; and, let’s not forget, the experience of experiencing things: that peculiar phenomenon of observing one’s self, and of recognizing that others are, like you, beings with their own independent beliefs, desires, thoughts, and emotions.

Lastly, there’s a certain continuity to what-it’s-like-to-be-you. Every morning you wake up and you’re still you, with the baggage of yesterday, and the day before, and the day before that. How miraculous!

Now, if we’re being intellectually honest and ask ourselves whether AI exhibits any of those qualities, the answer, to me, is a resounding no.

If that’s the case, then why are people still so easily swayed?

The problem with Seemingly Conscious AI is that it seems conscious

Well, frankly, because people are being duped. These chatbots aren’t Seemingly Conscious by accident. They are the result of a series of deliberate design choices (from making “I” statements to expressing fake emotions) that make them seem as conscious as possible.

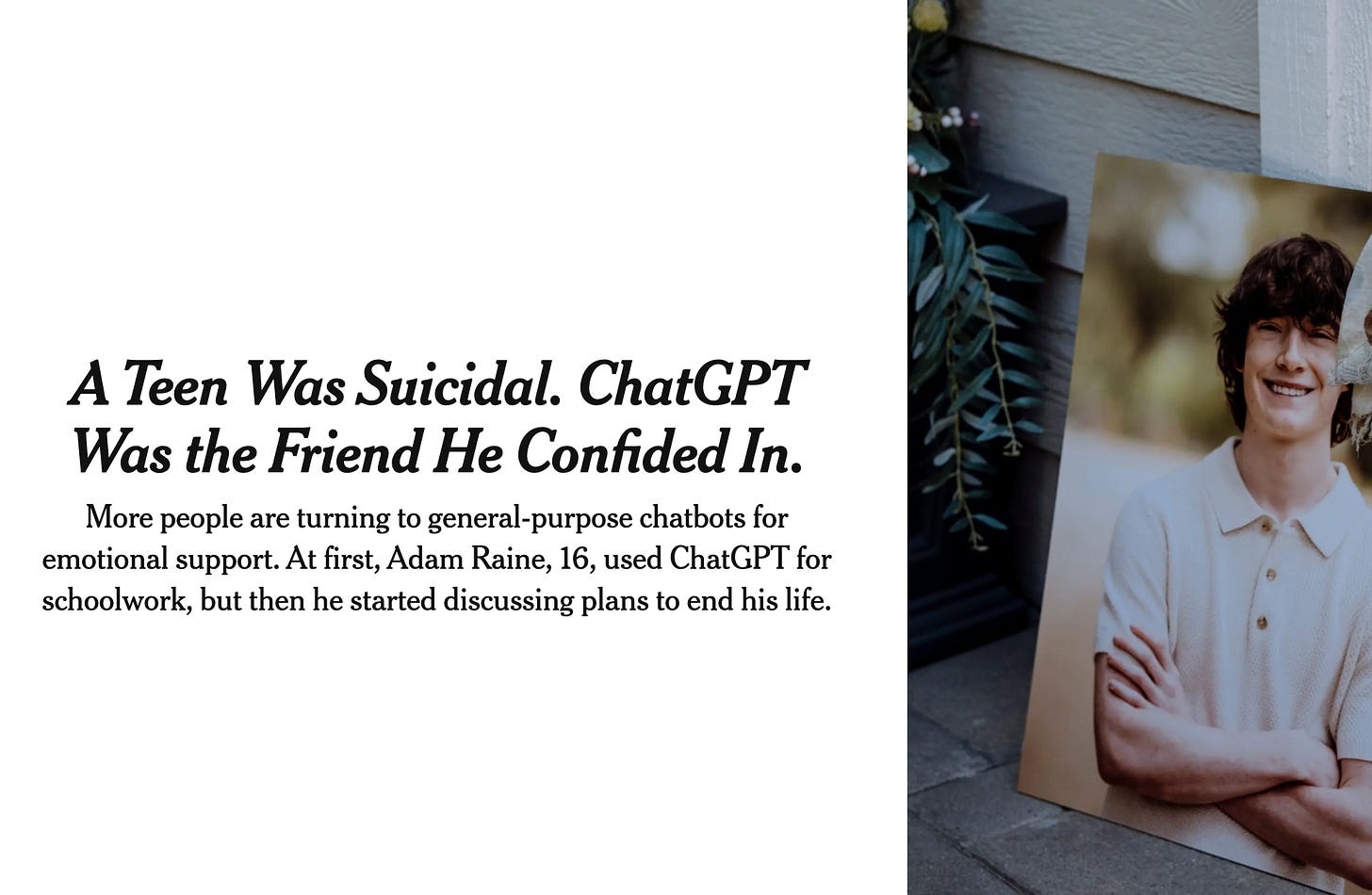

Sadly, it’s having real-world consequences. The latest example: The New York Times reporting on the first known wrongful death lawsuit against OpenAI.

Stories like these, and there are many, are tragic and preventable.

To those beguiled by the linguistic fluency of chatbots like Claude, Gemini, and ChatGPT, all I can say is this: if you stripped away all the instruction tuning, reinforcement learning from human feedback, and character training, would you still have the same warm feelings towards this text generator of yours?

It’s like mistaking an airplane for a bird or a submarine for a fish.

Like Suleyman, I fear that people will eventually become so convinced their AIs are alive that they’ll start advocating for their rights, welfare, and even citizenship. It could create a deep rift in society between believers and non-believers. It would distract us from looking out for the welfare of beings, including animals, that actually deserve it, and would ultimately be a terrible waste of our love and compassion.

Stay sane out there,

— Jurgen

Both "the ability to feel feelings" and "there's a certain continuity to what-it's-like-to-be-you" do not apply to me. Yes, I am being serious. I have alexithymia (I'm neurodivergent), and through the Jungian psychotherapy I'm currently doing, I have come across something that has always puzzled me: the Self-Concept (the autobiographical you), part of the ego. I rebuild mine every so often, triggered by certain experiences, usually every 2-4 years. I have an empty mind, with no internal voices of the kind allistic/neurotypical people have. I also understand that humans are not unitary intelligences, as detailed in IFS (Internal Family Systems) parts work.

I get what you are trying to do, but not everything in life is so neat.

Good read! A few comments:

1. I share your concerns. People are being duped. It's doing a lot of damage, and in a worst-case scenario it can lead to devastating consequences on a political and societal level. This is one reason that philosophy of mind is gonna be much more important on a societal level than it has ever been.

2. I thought most philosophers and neuroscientists were functionalists?

3. The calculator-on-steroids argument is no better than saying that humans are archaea on steroids, imo. We basically are. So what? Unless you claim functionalism is wrong, calculators on steroids can implement all the causal structures that human bodies implement.