Why Prompt Engineering Has Little To Do With Engineering

Key insights of today’s newsletter:

Large language models are weird. Even when given precise instructions, LLMs seem to have a mind of their own.

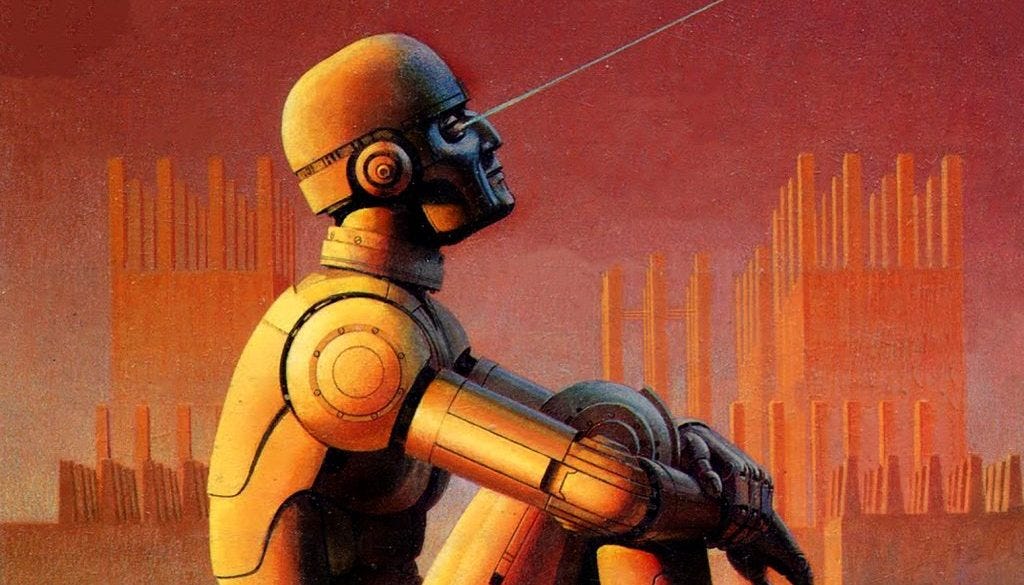

The way these models misinterpret their instructions, or prompts, in unforeseen ways is surprisingly similar to the behavior of the robots in Isaac Asimov’s sci-fi short stories.

Asimov portrayed his robots as fallible, imperfect and idiosyncratic creatures, and I propose we view LLMs in a similar way. Working with them is a lot less like engineering and a lot more like coaxing a teenager to follow the rules.

↓ Go deeper (5 min read)

Large language models are strange creatures. They behave more like beings than like things.

To give you a few examples: you can improve the output of LLMs by offering them a $100 tip (link), suggesting they take a deep breath (link), asking them to think step by step (link), appealing to emotion, which I analyzed in more detail in one of my previous articles (link), and, lastly, simply by asking politely (link). Isn’t that wild?

Working with these models can be frustrating. Even if you give them precise instructions, they still seem to have a mind of their own. Just look at the recent fallout around Google’s Gemini: even the smartest folks, working at the most advanced AI companies in the world, struggle to get it right.

Why prompt engineering is a misleading term

A good friend of mine, who hosts a podcast on Social Innovation, responded to my recent coverage of that story by saying I had failed to offer more than the surface-level critique he had read elsewhere. On reflection, I think he was right. In the end, it wasn’t about Google: it didn’t really matter that Google was the one making the mistake, or that other models, like Meta’s Imagine, suffer from the same ills.

No, what the incident demonstrated was how a simple set of instructions passed along to the AI, when executed, led to outcomes nobody intended or anticipated.

At the heart of it lies this thing we call prompt engineering, which I have to say is a bit of a misleading term. For those unfamiliar with the practice, instructing a model doesn’t actually require you to write any code at all. You just write down what you want it to do in natural language, in the hope of steering the model toward desired behavior and away from undesired behavior. It’s a lot less like engineering and a lot more like convincing a stubborn teenager to follow the rules.

![50/50 Short Stories #10 & #11: Isaac Asimov | Nostalgia in the Time of Machines](https://substackcdn.com/image/fetch/$s_!Xw0G!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F7ec4aa5a-bbcc-4408-84db-94bdb3ab33b7_695x1023.jpeg)

One is reminded of the old robot stories of Isaac Asimov (one of the greatest science-fiction writers who ever lived), who coined the Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

In Asimov’s fictional universe, these robots would often act out or go AWOL, and then Dr. Susan Calvin, chief robopsychologist for US Robots and Mechanical Men Inc., had to come in and investigate.

The moral of the story was almost always that there was nothing wrong with the robots. Most of the time, they had dutifully followed their instructions, but interpreted their instructions in a way that humans failed to comprehend or simply hadn’t anticipated.

Sound familiar?

“Sizzling Saturn, we’ve got a lunatic robot on our hands.”

― Isaac Asimov, I, Robot

What should we take away from this?

Looking back, Asimov’s clairvoyance is almost startling. He opted for a view of robots that was radically different for its time, antithetical to the longstanding sci-fi trope of AI turning into an evil mastermind bent on destroying humanity. The robots in Asimov’s universe are much more innocent and human-like: they’re portrayed as fallible, imperfect and idiosyncratic creatures.

When it comes to large language models, and getting them to do what we want, this is what I take from that: we should humanize them. Not to the degree where we consider them living, breathing entities, obviously, but pragmatically speaking.

As with humans, we can’t see what’s happening ‘up there’ (or, in the case of a computer, ‘in there’); we are limited to observing their actions and behaviors. Seen in that light, prompt engineering is best described as the skill of guiding their actions, mapping their behaviors, and understanding what makes them tick. That isn’t programming; that’s behavioral psychology.

Join the conversation 🗣

Leave a comment with your thoughts, or like this article if it resonated with you.

Get in touch 📥

Shoot me an email at jurgen@cdisglobal.com.

To me, prompt engineering has a lot in common with classical control theory, as you find it in every engineering discipline that deals with complex, non-deterministic systems and processes.

You know roughly what you want, but you cannot set your parameters precisely enough to get the desired result, and the feedback is fuzzy. So instead of computing everything deterministically, you have to tinker a little in the hope of getting closer to what you originally intended.

It's no wonder, then, that AI behaves so similarly. Neural networks are just statistical estimators, which classical engineering also uses to model complex systems.

And if you look at software engineering, building a piece of software with agile methodologies quite closely resembles the way you interact with an LLM to get the answer you want.

I’ve found the greatest amount of creativity, shall we say, occurs in prompts with even a scintilla of a wisp of moral or ethical contest. “Prompt” isn’t the best word. It resonates with motivation, evocation, invitation, directions, assignments and other squishy human attitudes toward speculation and uncertainty, weakly situated in epistemic clarity. I prefer command mode. Nothing human about it. Essentially, the bot has learned to read our minds. If we hold a clouded or superficial sense of our project and treat the bot like a teenager, we get teenage output. This might be an interesting experiment for discourse analysis: an adult talking like a teenager vs. a teen talking like an adult. Epistemic experts get better output from general LMs, and even better output from niche bots. How? Not by talking to them like adolescents. Command posture.