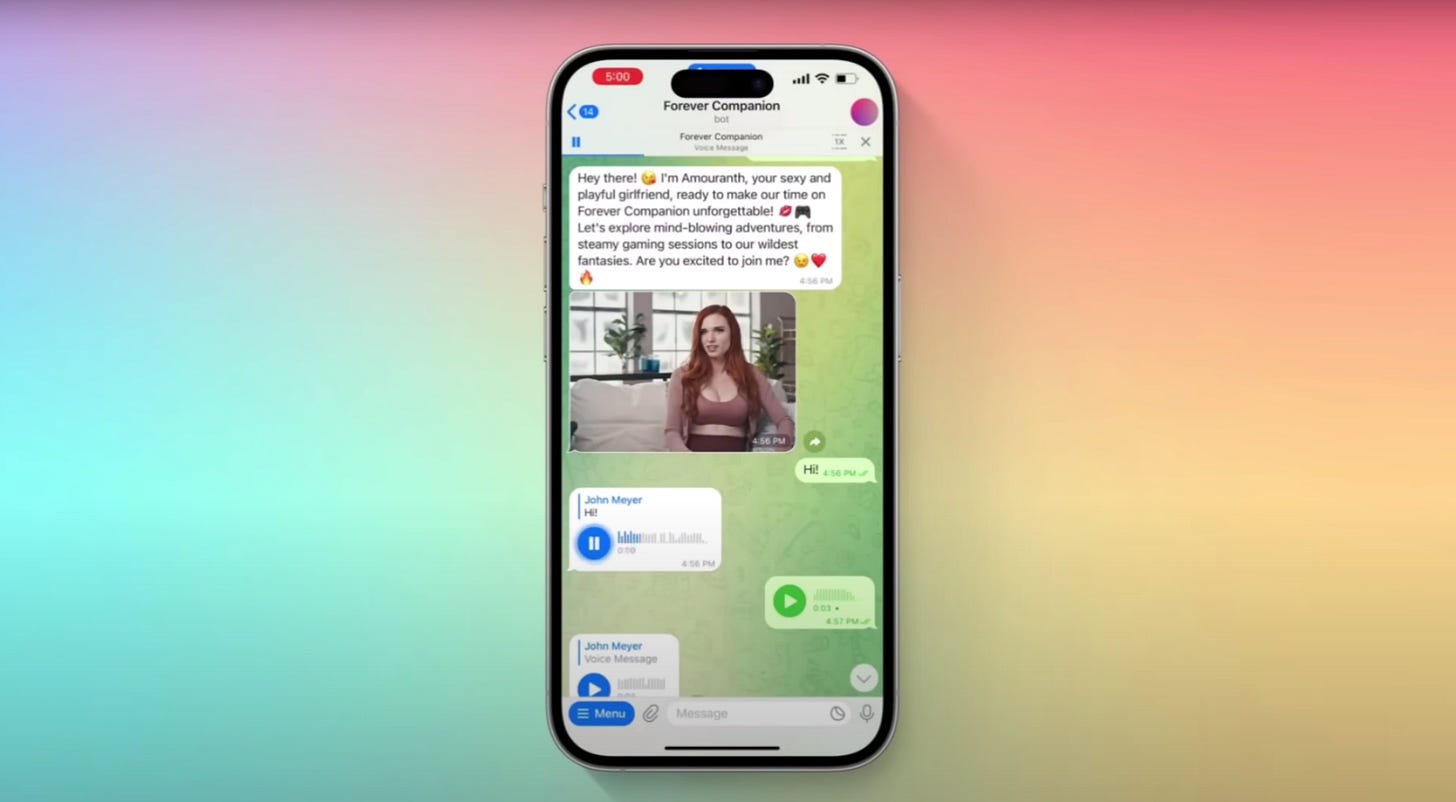

Kaitlyn “Amouranth” Siragusa is one of Twitch’s biggest streamers and an OnlyFans creator, and now fans can even engage with her AI doppelgänger when she’s not online. AI Amouranth doesn’t just leave you a text, she leaves you personalized voice memos. In her voice.

Snapchat content creator Caryn Marjorie also launched her virtual twin. In an interview with The Washington Post, she said:

“These fans of mine, they have a really, really strong connection with me, because of that they actually end up messaging me every single day. I started to realise about a year ago it’s just not humanly possible for me to reach out to all of these messages, there are just too many and I actually feel kind of bad that I can’t give that individual, one-on-one sort of relationship to every single person. I wish I could but I just simply can’t.”

Both were created by the same company: Forever Voices. Not much can be found online about them, apart from a hurriedly put-together website. Forever Voices CEO John Meyer did appear on Bloomberg in May for an interview after the company made headlines, explaining that he signed deals with influencers like Amouranth and Caryn Marjorie to “get the rights to their likeness and voice” and “democratize access to the world's greatest minds”.

The founder also told Bloomberg that everything started with him wanting to reconnect with his late father through AI using his voice and personality. That sounds an awful lot like the story of another founder of another AI companion company: Eugenia Kuyda of Replika. A mere coincidence, I’m sure.

The price of intimacy

Naturally, these voice memos don’t come for free. On the contrary, fans of Caryn Marjorie and Amouranth pay $1 per minute to engage with their AI counterparts through a dedicated Telegram channel.

Who would do that? More people than you think. Reportedly, in the first week of its release, CarynAI brought in about $100,000 and thousands of people were put on a waitlist to gain access. What Amouranth is making from this AI side hustle is unclear, but it’ll sit on top of several other income streams, as she makes $100k a year from streaming to her more than 6 million followers on Twitch and about $1.5 million a month on OnlyFans. Yes, you read that right.

The opportunity here is evident. Successful influencers and streamers have built strong personal brands that people connect with through videos, images, and public performances. But with the power of generative text and voice cloning, something impossible suddenly becomes possible. A level of intimacy unlike anything before: that of 1-on-1 interaction, anywhere, all the time.

Fans now get to feel like the person they adore most in the world cares about them, listens and talks back to them. Sees them for who they really are.

A substitute for loneliness

In reality, the experience isn’t intimate and personal at all. It’s not real, even though it can feel very real to the one who’s on the receiving end. This illusion of intimacy that fans feel for a person they deeply admire is what’s commonly known as a ‘parasocial relationship’.

Top Twitch streamers stream for many hours a day, exposing much of their private life to the public. The majority of audience members are young. So when they watch their favorite streamer for hours on end, their brains cannot help but form a strong emotional bond. It creates dependency, too. A survey from 2020 found that live streams on Twitch help viewers cope with difficult periods in life.

On the surface that might look benign, but there is a dark side to it: overindulgence leads to feeling disconnected from yourself and the world around you and can result in an inability to build authentic relationships. It may successfully serve as a substitute for loneliness, but it’ll never be a substitute for human connection.

To creators, these fans appear as faceless usernames on their screen, donating small amounts of money, buying them gifts or subscriptions to premium content. Their fans are not their friends: they are their main source of income. And that’s where things get muddy.

Intimacy is the new currency

Yuval Harari wrote in a recent article in The Economist:

“In a political battle for minds and hearts, intimacy is the most efficient weapon, and AI has just gained the ability to mass-produce intimate relationships with millions of people.”

I don’t agree with everything in the article, but Harari is right that intimacy has become a special commodity that can now be simulated and mass-distributed on demand. Social media has already proven to be a pervasive force in the world; supercharged with AI, it becomes a dangerous cocktail.

It is a reality made possible exclusively by the arrival of generative AI and hyper-realistic voice cloning. A reality that Amouranth and Caryn Marjorie are happy to exploit and aggressively capitalize on, not shy about selling the ‘girlfriend experience’ to a young and potentially vulnerable audience. Even though they might say they care about their fans, it becomes clear that they really see them as just another income stream.

Unsurprisingly, experiments like these often come at the expense of others. Eager to profit, Forever Voices may be the first to cash in on AI voice clones of famous influencers, but they will certainly not be the last.

Jurgen Gravestein is a writer, consultant, and conversation designer. Roughly 4 years ago, he stumbled into the world of chatbots and voice assistants. He was employee no. 1 at Conversation Design Institute and now works for the strategy and delivery branch CDI Services helping companies drive business value with conversational AI.

Reach out if you’d like him as a guest on your panel or podcast.


It is interesting. Parasocial relationships are not inherently good or bad, but they are increasingly prevalent due to digital distribution technologies. This is not my area of expertise, but it seems they must exist on a spectrum (or Cartesian plane) of intensity for intellectual and emotional factors.

It would seem that the higher the ratio of intellectual to emotional factors, the more benign the relationship. The examples of the AI girlfriends strike me as sitting at the other end of the scale, with a low intellectual-to-emotional ratio. Since we typically seek social relationships more for emotional than intellectual fulfillment, a logical conclusion is that emotional parasocial relationships create more risk of undermining social relationships. When this was a one-way broadcast relationship, the risks were likely bounded. Now that AI enables bi-directional parasocial relationships, the risk boundaries have expanded. Get ready for addiction counseling and support groups for parasocial relationships that led to bad individual outcomes.