Here’s what you need to know:

A new study confirms that writers can benefit creatively from using generative AI, especially writers who are less creative to begin with.

Similar results are found in studies with consultants, where underperformers suddenly perform on par with, or slightly below, high performers.

On the other hand, the use of AI could lead to the ‘homogenization of ideas’ and a depreciation of human expertise, which would be a net negative.

↓ Go deeper (5 min read)

Does generative AI make us more or less creative? That’s the question researchers from University College London and the University of Exeter set out to answer by studying people’s ability to write short stories with and without the help of AI.

Their findings were threefold. First, having access to generative AI increases the average novelty and usefulness of the stories (two frequently studied dimensions of creativity) compared to unassisted writing. Second, the gains from writing with AI are larger for less creative people than for people who are naturally creative. And last but not least, while individual writers are better off on average, the stories they produce collectively cover a narrower range of novel content.

It poses an interesting dilemma: should we lift the bar for individuals if it harms our ability to innovate as a group?

Leveling the playing field

Making AI more accessible is commonly referred to as the democratization of AI. As more people get their hands on these tools, the playing field levels out. Can’t write? With AI you can. Can’t draw or paint? With AI you can. Don’t know how to code? Let AI write that code for you.

The best possible outcome is that everyone gets a little smarter. The study seems to confirm this: people who are less creative suddenly perform on par with, or slightly below, people who are considered more creative.

Similar results can be observed in other research. In September of last year, one of the researchers wrote about a study he and his colleagues had done with knowledge workers at Boston Consulting Group:

“Across a set of 18 tasks designed to test a range of business skills, consultants who had previously tested in the lower half of the group increased the quality of their outputs by 43% with AI help while the top half only gained 17%. Where previously the gap between the average performances of top and bottom performers was 22%, it shrunk to a mere 4% once the consultants used GPT-4.”

Taken at face value, this is awesome. What’s not to like? But there’s a downside, a footnote to the story that mustn’t be overlooked.

The homogenization of ideas

The fear is that widespread access to AI tools may lead to a ‘homogenization of ideas’. More people will start to use AI, but the less they know, the harder it will be for them to critically assess the quality or originality of the output.

Ironically, these folks are better off with AI than without it, but collectively we are worse off. Overreliance can lead to a false sense of competence, as well as a diminished appreciation for specialized knowledge, eroding the value placed on genuine experts who have decades of expertise and experience to draw on.

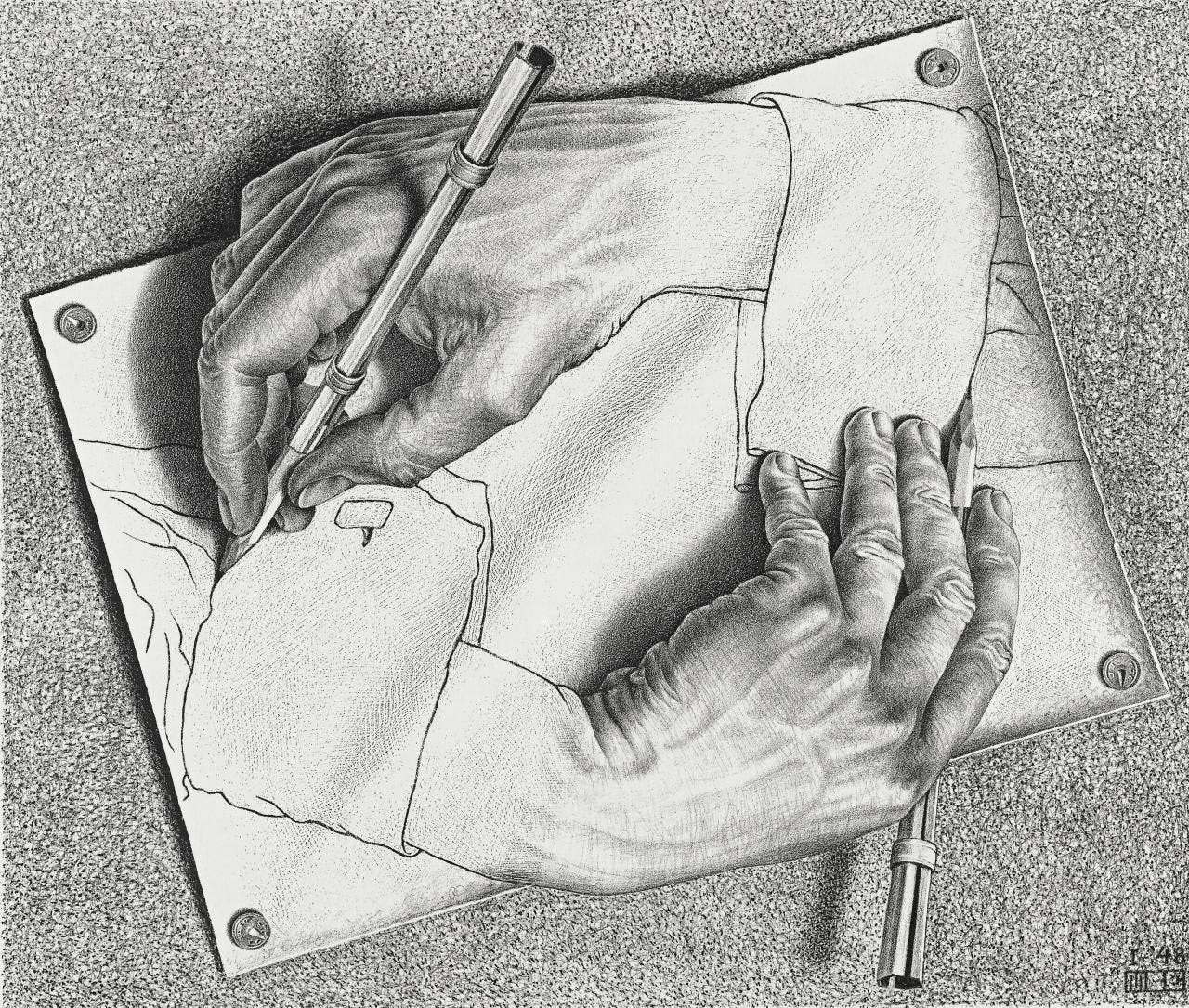

It’s a vicious circle, a self-perpetuating cycle of AI-generated content that stifles diversity of thought. And as AI is increasingly trained on its own output, we are reminded of the work of M. C. Escher: the hand that draws its own hand, the snake that eats its own tail.

Join the conversation 🗣

Leave a like or comment if this article resonated with you.

Get in touch 📥

Shoot me an email at jurgen@cdisglobal.com.

@Jurgen Gravestein Interesting. Just read the post by @Ben Dickson on research by UCLA on the impact of GenAI on creative writing.

Should complement yours.

You point to an important problem.

Your observation "More people will start to use AI, but the less they know, the harder it will be for them to critically assess the quality or originality of the output" is something I have been struggling with for the past year ... using genAI shifts the user's role from creating to evaluating.

But that presupposes that the user has the knowledge/skill to evaluate.

This I think is a real concern for people who haven't gone through the painstaking process of learning a skill. There are no free lunches. A key challenge for education and L&D is not to simply teach users how to use genAI but also how to hone and develop skills without genAI so that they can properly evaluate and "critically assess" the generated output.

I am just not sure how this can be done in an expedient way.