Character-driven AI

Crafting an AI’s character isn’t a feature; it’s a necessity.

Summary: While general-purpose assistants like ChatGPT, Gemini, and Claude have comparable abilities, their personalities are unique. Anthropic researchers emphasize that crafting an AI’s character is essential to ensuring AI aligns with human values.

↓ Go deeper (8 min)

I’m a big fan of character-driven movies. They make for a more interesting watch than films where the plot is the only thing driving the story forward.

I think the same goes for AI. Carefully crafting an AI’s character can make interactions more engaging and, frankly, more useful. Short, crisp responses convey a completely different feeling than an assistant that consistently goes off on long monologues. According to researchers from Anthropic, character can even help make the models safer.

To understand how, I’ll briefly touch on the inner workings of these models and make the case for why I think character building for general-purpose assistants is one of the most interesting open areas of research.

Shaping the AI’s character

Compared with ‘personality’, the word ‘character’ refers more broadly to someone’s beliefs, attitudes, and values. When someone is resilient and strong-willed, we say this person has character. Character drives action.

Few people realize that instilling AI with character isn’t just a cosmetic choice; it’s essential for shaping the model’s behavior.

Here’s how the folks at Anthropic put it:

“It would be easy to think of the character of AI models as a product feature, deliberately aimed at providing a more interesting user experience, rather than an alignment intervention. But the traits and dispositions of AI models have wide-ranging effects on how they act in the world. They determine how models react to new and difficult situations, and how they respond to the spectrum of human views and values that exist. Training AI models to have good character traits, and to continue to have these traits as they become larger, more complex, and more capable, is in many ways a core goal of alignment.”

Alignment is about making AI do what we want it to do and refuse to do what we don’t want it to do. This is tricky, because these models aren’t your usual software. They are more akin to a well-trained dog than to a traditional computer program: they follow our instructions not because they were programmed to, but because they’ve been conditioned to.

I invite you to listen to this conversation between Stuart Ritchie (Research Communications at Anthropic) and Amanda Askell (Alignment Finetuning Researcher at Anthropic) if you want to dive deeper.

Besides alignment, there’s another reason to focus on character-driven AI: product differentiation. Let me explain.

The case for character-based AI

It’s no secret that AI companies are scraping the internet for data: Wikipedia, Reddit, academic papers, books, and the lot. The problem is that, because these models have all been trained on largely the same internet data, they’ve become virtually identical in terms of knowledge and capabilities.

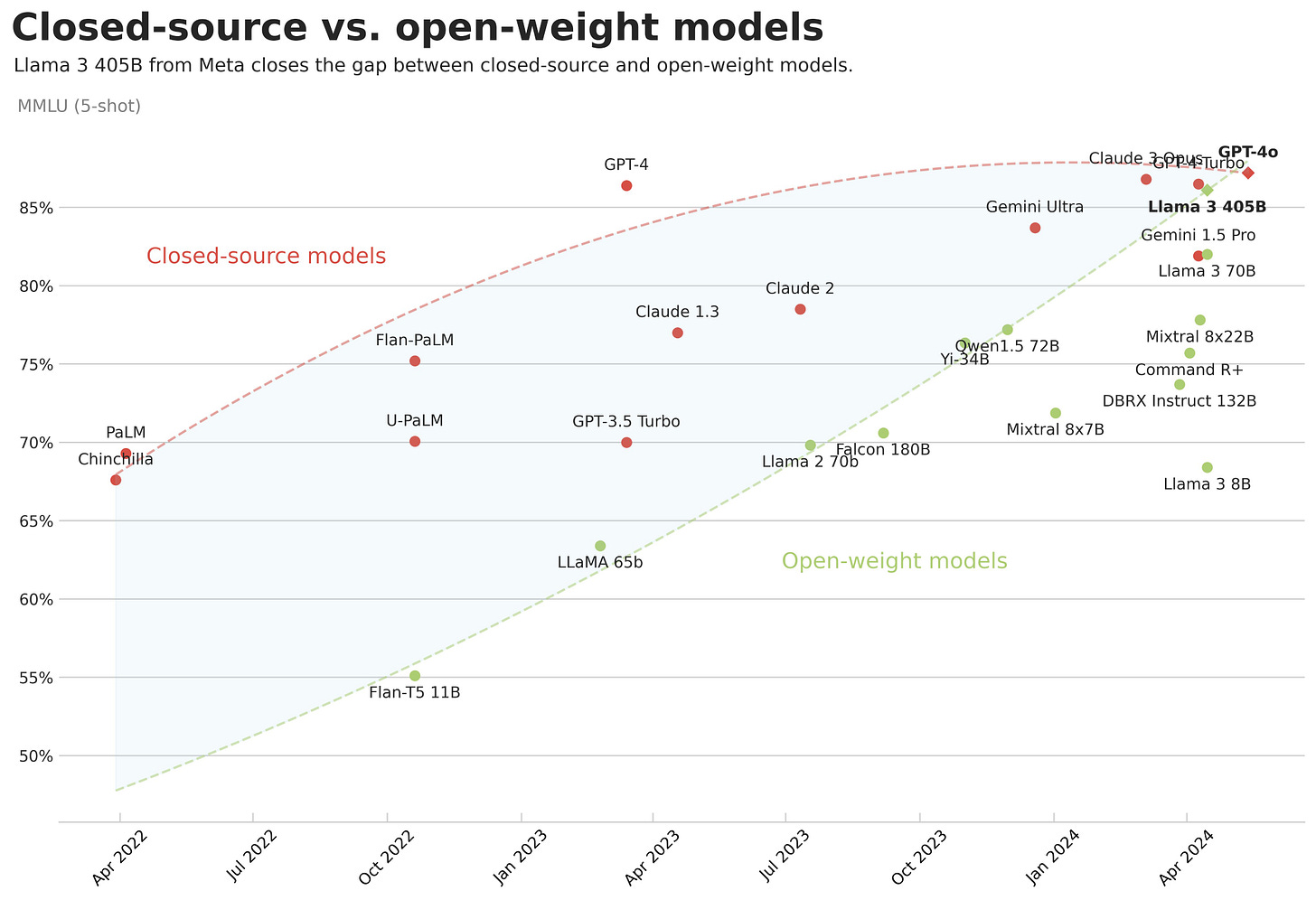

Not only do we have a handful of commercial models (from OpenAI, Google, and Anthropic) that all hover around the same level, but the gap between closed-source and open-weight models also seems to be closing.

If progress remains incremental in the coming years, intelligence-in-the-sky is destined to become a commodity (something I’ve written about before). This means margins will be razor-thin and differentiation must be sought at the brand and application level. And this is exactly where personality can make a difference.

Because if your AI assistant is the product, character is everything.

Think about it: would you rather talk to an open-minded and curious entity or to something rigid and monotone? Would you rather ask for advice from someone who generally respects your views (and maybe gently challenges them) or from a book-smart know-it-all who constantly corrects your mistakes and makes you feel small?

My prediction: character will soon be the number one thing that wins over people’s hearts and minds. The real magic lies not in obsessing over processing power, but in creating AI with character.

Until next time,

— Jurgen

I'm sceptical. I find some of the "character-influenced" exchanges I've seen quite sinister, maybe even harmful. Whilst I can see how bias can be inherent within datasets, I see no transparency as to how character traits are imbued into an AI model. Nor can I (so far) accept that human-like "personalities" are spontaneous attributes, somehow derived from within the "black box" as a consequence of training, or that AI can demonstrate an opinion or a moral compass unless there is some kind of human intervention at play to make that happen.

So my question, Jurgen, is: how exactly are these traits generated? Do they appear magically as a consequence of training, or is there some kind of additional intervention or overlay being applied so that factual interactions are couched within a particular "character"? If an AI is prompted to be "charitable" (as I'm hoping you will be in answering my questions...), what mechanism is at play to make that AI "charitable" in its approach? Do we understand the "neural" processes by which that happens?

In short, is there explainability? If not, if we do not have full understanding or control, who is to say that the AI might not spontaneously and unexpectedly skew future interactions to make them potentially disruptive or harmful?

I am coming to the understanding that you can connect far more deeply with a broken, imperfect AI personality than with one "designed" on purpose. "Real" personality is stronger.